What is a Large Language Model and How Does it Work?

Beyond the buzzword, the LLM introduces an innovative approach to using AI in content creation, one built on some of the most sophisticated techniques in artificial intelligence.

LLMs show how transformative language technology has become. HubSpot reports that 42% of marketers use AI-powered content creation tools. These tools go beyond simple automation and are genuinely changing the way we view and interact with content production.

In this article, we will explain what LLMs are and explore real-world use cases.

What is a Large Language Model?

A Large Language Model (LLM) is an artificial intelligence model that has transformed how language is generated and interpreted, thanks to the sophisticated Transformer networks at the core of its structure.

LLMs are defined by their large-scale neural networks, which let them process enormous amounts of text and capture complex linguistic subtleties. Their strong grasp of context and of the dependencies between words makes them well suited to a variety of tasks, from translating and completing texts to creating original material.

The neural architecture at the core of a large language model, a complex web of interconnected nodes, allows the model to learn and mimic the nuances of human language. By training on enormous datasets to predict the next word in a text, these models develop a deep grasp of grammar, syntax, and semantics, which enables them to produce coherent and contextually relevant content.
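To make next-word prediction concrete, here is a minimal Python sketch of a toy bigram model: it counts which word follows which in a tiny made-up corpus and predicts the most frequent follower. This is a simplifying assumption, not how an LLM works internally. Real LLMs use neural networks over subword tokens and condition on long stretches of preceding text, but the goal of predicting what comes next is the same idea. The names `train_bigram`, `next_word_probs`, and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count, for every word, which words follow it in the corpus."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows

def next_word_probs(follows, prev):
    """Turn follower counts into probabilities P(next | prev)."""
    counts = follows.get(prev.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

def predict_next(follows, prev):
    """Return the most likely next word, or None for an unseen word."""
    probs = next_word_probs(follows, prev)
    return max(probs, key=probs.get) if probs else None

corpus = [
    "language models predict the next word",
    "the next word depends on the context",
    "models learn from the data",
]
model = train_bigram(corpus)
print(predict_next(model, "the"))     # next
print(next_word_probs(model, "the"))  # {'next': 0.5, 'context': 0.25, 'data': 0.25}
```

A model that only looks one word back cannot capture the long-range context described above, which is exactly the gap the Transformer architecture addresses.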

Beyond their linguistic abilities, LLMs are highly adaptable, with applications in many domains, including content generation, natural language processing, and conversational AI. As a result, they are playing a significant role in changing the face of human-computer interaction.

What are Transformer Networks?

In the 2017 paper “Attention Is All You Need” by Vaswani et al., Google researchers introduced the original Transformer model. The Transformer architecture, which is now essential to machine learning and natural language processing, was first proposed in this paper.

The Transformer’s attention mechanism is fundamental to the LLM breakthrough. It enables the model to focus on different parts of the input sequence, capturing the contextual subtleties and long-range relationships that are essential for precise language processing.

Unlike conventional recurrent neural networks (RNNs) and long short-term memory networks (LSTMs), Transformers do not process their input one step at a time. Instead, they handle all positions of the input in parallel, which greatly increases computational efficiency and makes it easier to model long-range dependencies. This architecture now underpins many other applications, such as text summarization, machine translation, image recognition, and more. Thanks to their adaptability and scalability, Transformer networks have become widely used and have driven advances across many artificial intelligence domains.
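The attention mechanism can be sketched in plain Python. The snippet below implements single-head scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, as defined in the 2017 paper, but without the learned projection matrices, multiple heads, or tensor libraries that real models use. No iteration of the loop depends on a previous one (unlike an RNN's hidden state), which is why the rows can be computed in parallel. The toy vectors are made up for illustration.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Single-head scaled dot-product attention.

    Q: one query vector per output position.
    K, V: one key and one value vector per input position.
    Returns (outputs, weights); weights[i][j] is how much position i attends to j.
    """
    d_k = len(K[0])
    outputs, weights = [], []
    for q in Q:  # each row is independent of the others, so rows could run in parallel
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)
        weights.append(w)
        # Output = attention-weighted average of the value vectors.
        outputs.append([sum(wj * v[c] for wj, v in zip(w, V)) for c in range(len(V[0]))])
    return outputs, weights

# Toy example: two input positions with 2-dimensional keys and values.
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
Q = [[5.0, 0.0],   # strongly matches the first key
     [0.0, 0.0]]   # matches neither key, so attention is spread evenly
out, w = attention(Q, K, V)
print(out[1])  # [5.0, 5.0]
```

The first query puts most of its weight on the first position, so its output is dominated by the first value vector; the second query averages both values equally.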

What are the Main Components of Large Language Models?

- Neural Architecture: Transformers form the foundation of the neural architecture of large language models. Their attention mechanisms let these models focus on the most relevant parts of an input sequence. This architectural base is what makes sophisticated processing and interpretation of language possible.

- Training Dataset: Large language models are trained on a wide variety of text corpora. This training data exposes them to many language patterns, styles, and settings, giving them a thorough grasp of grammar, syntax, and semantics.

- Pre-training and Fine-tuning: Large Language Models are first pre-trained on a large dataset to build a general knowledge of language. They are then fine-tuned on targeted datasets suited to specific domains or applications, which lets the model specialise its expertise.

- Contextual Understanding: Large language models represent the long-range dependencies and contextual relationships in text well. Their ability to produce content that is both coherent and contextually appropriate is one of their main strengths in natural language processing tasks.

- Memory and Processing Power: Large Language Models require substantial computing power for both training and inference. They rely on large-scale parallel processing to handle enormous volumes of data efficiently.
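The memory requirement can be estimated with back-of-the-envelope arithmetic: storing a model's weights takes roughly the number of parameters times the bytes used per parameter. The sketch below applies this to GPT-3's 175 billion parameters. It counts weights only; training needs several times more memory for gradients, optimizer state, and activations, and inference needs extra room for activations and cached attention keys and values. The function name and dtype table are illustrative assumptions.

```python
# Bytes needed to store one parameter in common numeric formats.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2, "int8": 1}

def weight_memory_gb(num_params: float, dtype: str = "float16") -> float:
    """Approximate memory (decimal gigabytes) needed just to hold the weights."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9

GPT3_PARAMS = 175e9  # GPT-3 has about 175 billion parameters

for dtype in ("float32", "float16", "int8"):
    print(f"{dtype}: {weight_memory_gb(GPT3_PARAMS, dtype):.0f} GB")
# float32: 700 GB
# float16: 350 GB
# int8: 175 GB
```

Even at 16-bit precision, the weights alone need several times the memory of a single 80 GB data-center GPU, which is why models of this size are split across many accelerators.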

What are some examples of Large Language Models?

General-purpose LLMs

- LaMDA (Language Model for Dialogue Applications): Developed by Google AI, LaMDA emphasizes conversational fluency and understanding diverse intentions. It’s known for its ability to engage in open-ended, informative dialogue.

- Megatron-Turing NLG (Natural Language Generation): Developed jointly by Microsoft and NVIDIA, this model excels at generating different creative text formats, like poems, code, scripts, and emails. It aims to empower human creativity through AI assistance.

- Jurassic-1 Jumbo: AI21 Labs’ offering is known for its factual language understanding and reasoning abilities. It can answer questions informatively, summarize factual topics, and even challenge premises.

Specialized LLMs

- WuDao 2.0: Developed by the Beijing Academy of Artificial Intelligence, this model surpasses human performance on reading comprehension benchmarks. It’s designed for Chinese language processing and understanding.

- GPT-3 for Healthcare: This fine-tuned version from OpenAI focuses on tasks relevant to the healthcare domain, such as medical question answering and generating patient safety reports.

- BlenderBot 3: From Meta AI (formerly Facebook AI), this model is specifically trained for engaging in open-ended, casual conversations. It thrives on humor, chit-chat, and personal interactions.

Accessible LLMs

- Gemini: Google AI’s accessible LLM, trained on a massive dataset of text and code. It aims to be informative and comprehensive in its responses, assisting users with various tasks and questions.

- Hugging Face Transformers: This open-source library provides access to diverse pre-trained LLMs for research and experimentation. It empowers developers to explore and utilize the potential of LLMs in their projects.

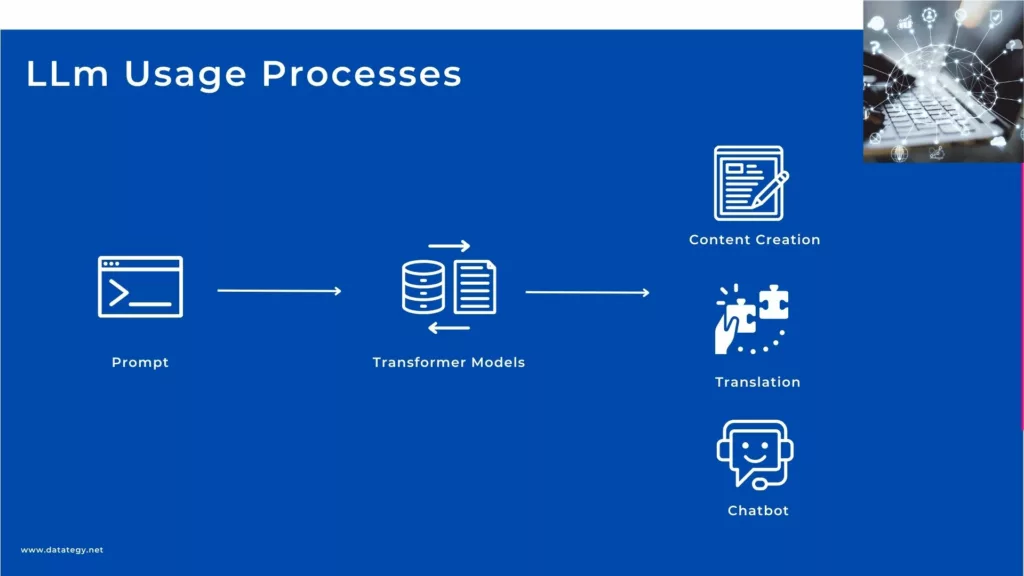

What are the Real-World Use Cases of LLMs?

Content Creation

Large Language Models (LLMs) are becoming indispensable tools for content creators, enabling them to easily create engaging content across a variety of platforms. These models excel at producing persuasive blog posts, articles, and marketing material in a style that closely resembles human writing.

Thanks to their thorough training on large datasets, LLMs are able to interpret complex instructions and provide outputs that are appropriate for the situation. This helps marketers and content creators create compelling copy for a variety of uses.

Offering a blend of linguistic finesse and adaptability, LLMs streamline the content creation process, saving time and resources for both individuals and businesses. This can be seen in the creation of catchy taglines, informative product descriptions, or even entire creative writing pieces.

Translation Services

By understanding the nuances of language and context, LLMs, which are frequently built on sophisticated transformer architectures, have transformed the translation process. Going beyond simple word-for-word translation, LLMs are adept at grasping complex linguistic subtleties, allowing them to produce contextually correct translations.

This sophistication helps ensure that translated content preserves the cultural and contextual depth of the source language while retaining its original meaning.

When companies and individuals use LLMs for translation, they become more productive and communicate across languages with higher quality, which makes it easier to engage international audiences and markets.

Code Generation

Large Language Models (LLMs) are vital tools for developers in the dynamic field of code generation, providing support throughout the complex process of software development.

Built on advanced transformer architectures, these models can interpret code written in intricate programming languages and generate short code snippets on command.

Think of LLMs as expert coders offering perceptive autocomplete suggestions that not only speed up coding but can also improve the overall quality of the codebase. Their talent for deciphering syntactic complexities and contextual cues makes them similar to experienced code mentors, helping developers work more productively and with fewer errors.

Personal Assistants

Thanks to their mathematical foundations, LLMs act not just as responders but as flexible partners in user support. By integrating contextual data and learning patterns from large datasets, these models help continuously improve personal assistant features.

Combining artificial intelligence with scientific rigour, personal assistants point toward a future in which human-machine interactions feature greater accuracy, context-aware replies, and a growing ability to understand and meet user needs.

Sentiment Analysis

In sentiment analysis, Large Language Models (LLMs) are powerful tools that use their natural language processing capabilities to identify the emotional tone of a piece of text.

These models have a remarkable ability to capture subtle language nuances and contextual information, enabling them to identify sentiment polarity accurately across a range of emotions. By turning large amounts of text, from social media chatter and consumer reviews to public opinion surveys, into actionable insights, LLMs help organizations understand current attitudes.
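One common way to apply an LLM to sentiment analysis is zero-shot prompting: ask the model to label each text, map its free-form reply back onto a fixed set of labels, then aggregate the results. The sketch below covers only the prompt-building, parsing, and counting steps. The model call itself is omitted because it depends on the provider; the replies in the example are made-up stand-ins for model output, and all function names are hypothetical.

```python
from collections import Counter

LABELS = ("positive", "negative", "neutral")

def build_sentiment_prompt(text: str) -> str:
    """Zero-shot instruction asking the model for a one-word label."""
    return (
        "Classify the sentiment of the text below as positive, negative, or neutral.\n"
        "Answer with a single word.\n\n"
        f"Text: {text}\n"
        "Sentiment:"
    )

def parse_sentiment(reply: str) -> str:
    """Return the first known label found in the reply, or 'unknown'.

    Deliberately naive: a reply such as "not positive" would still be read
    as positive, so real pipelines should constrain the output format.
    """
    for word in reply.lower().split():
        word = word.strip(".,!?:;\"'")
        if word in LABELS:
            return word
    return "unknown"

def summarize(replies) -> Counter:
    """Count labels across many model replies, e.g. one per customer review."""
    return Counter(parse_sentiment(r) for r in replies)

# Made-up replies standing in for what a model might return for four reviews.
replies = ["Positive.", "negative", "The sentiment is neutral.", "I'm not sure."]
print(build_sentiment_prompt("Great product, fast delivery"))
print(summarize(replies))
```

Keeping an explicit "unknown" bucket, rather than silently defaulting to "neutral", makes it visible when the model ignores the requested format.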

Chatbots

In conversational artificial intelligence, chatbots powered by Large Language Models (LLMs) represent a smart fusion of modern technology and linguistics. Because LLMs are built on complex transformer architectures, they allow chatbots to converse with customers in a sophisticated and contextually appropriate way.

These models can grasp the nuances of language, identify the user’s intent, and produce logical, cohesive replies, making for an engaging and natural dialogue.

To achieve rich, coherent conversations, chatbots must be able to handle a wide range of inquiries and preserve context throughout a discussion. Using their self-attention mechanisms, LLMs adapt dynamically to user inputs.
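In practice, a chatbot typically preserves context by sending the recent conversation history back to the model with each new message, trimmed to fit the model's limited context window. The sketch below keeps the system instruction plus as many of the most recent turns as fit in a budget. Counting whitespace-separated words is a simplifying assumption standing in for a real tokenizer, and `Message` and `trim_history` are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class Message:
    role: str     # "system", "user", or "assistant"
    content: str

def approx_tokens(text: str) -> int:
    """Crude stand-in for a tokenizer: one token per whitespace-separated word."""
    return len(text.split())

def trim_history(messages, budget: int):
    """Keep system messages plus the newest turns whose total size fits `budget`."""
    system = [m for m in messages if m.role == "system"]
    turns = [m for m in messages if m.role != "system"]
    used = sum(approx_tokens(m.content) for m in system)
    kept = []
    for m in reversed(turns):  # walk from the newest turn backwards
        cost = approx_tokens(m.content)
        if used + cost > budget:
            break  # older turns are dropped once the budget is exhausted
        kept.append(m)
        used += cost
    return system + kept[::-1]  # restore chronological order

history = [
    Message("system", "You are a helpful assistant."),
    Message("user", "Hi there"),
    Message("assistant", "Hello! How can I help?"),
    Message("user", "What is an LLM?"),
]
# With a budget of 10, only the system message and the latest question fit.
for m in trim_history(history, budget=10):
    print(m.role, "->", m.content)
```

Dropping the oldest turns first is the simplest policy; some systems instead summarize older turns so that earlier context is compressed rather than lost.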

Large Language Models (LLMs) are pivotal, transforming information handling with precise language understanding and generation.

Eric CHAU

CTO-Datategy

Why is Fine-tuning LLMs so Important?

Fine-tuning Large Language Models (LLMs) is a powerful way to turn these strong, general-purpose language tools into custom solutions that match particular needs.

LLMs such as OpenAI’s GPT-3 are first pre-trained on large, varied datasets, so they have a general knowledge of language but may lack domain-specific subtleties. Fine-tuning continues training the model on domain-specific data, which focuses it on that domain and improves its ability to produce specialized, contextually relevant material.
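The pre-train-then-fine-tune workflow can be illustrated with a deliberately tiny model. The sketch below trains a next-word model (one row of softmax logits per previous word) with plain gradient descent on a small general corpus, then continues training the same weights on a small domain corpus. This is a toy analogue under strong simplifying assumptions, not a real LLM fine-tuning pipeline: real LLMs have billions of parameters, use neural networks over long contexts, and are trained with specialized libraries. The class name, corpora, and hyperparameters are made up for illustration.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

class TinyNextWordModel:
    """Next-word model with a row of learnable logits for each previous word."""

    def __init__(self, vocab):
        self.vocab = sorted(vocab)
        self.index = {w: i for i, w in enumerate(self.vocab)}
        n = len(self.vocab)
        self.logits = [[0.0] * n for _ in range(n)]

    def train(self, sentences, epochs=20, lr=0.5):
        """Gradient descent on the cross-entropy of each (previous, next) word pair."""
        for _ in range(epochs):
            for sentence in sentences:
                words = sentence.split()
                for prev, nxt in zip(words, words[1:]):
                    row = self.logits[self.index[prev]]
                    probs = softmax(row)
                    target = self.index[nxt]
                    # d(loss)/d(logit_j) = p_j - 1 for the target word, p_j otherwise
                    for j, p in enumerate(probs):
                        row[j] -= lr * (p - (1.0 if j == target else 0.0))

    def predict(self, prev):
        row = self.logits[self.index[prev]]
        return self.vocab[max(range(len(row)), key=row.__getitem__)]

general = ["the dog ran", "the cat sat", "the cat ran"]
domain = ["the patient improved", "the patient rested"]
vocab = {w for s in general + domain for w in s.split()}

model = TinyNextWordModel(vocab)
model.train(general)           # "pre-training" on general text
print(model.predict("the"))    # cat
model.train(domain)            # "fine-tuning": same weights, domain data
print(model.predict("the"))    # patient
print(model.predict("dog"))    # ran (untouched by the domain data)
```

Fine-tuning here does not start from scratch: it updates the already-trained weights, so behaviour the domain data never touches (what follows "dog") is preserved.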

Fine-tuning has advantages that go beyond domain specialization. By incorporating organizational principles and ethical considerations into the model, users can help ensure that its outputs meet predefined requirements.

Create Your Own Fine-tuned LLMs Using the papAI Platform

The papAI platform makes it possible to create your own fine-tuned Large Language Models (LLMs). With this tool, users can tailor LLMs to their own domains and purposes.

With papAI, individuals and organizations can curate datasets specific to their needs, making targeted LLM training easier. Its easy-to-use interface simplifies fine-tuning and helps users improve model performance for specific applications.

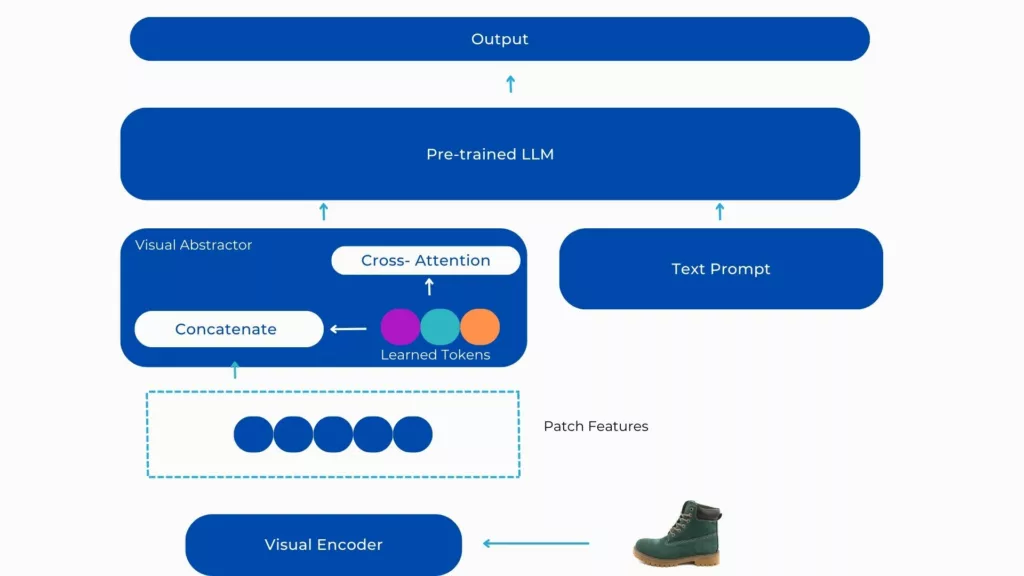

mPLUG-Owl architecture for improved image captioning using LLMs

Watch papAI's Demo to Expand Your Horizons

- General-purpose LLMs: LaMDA (Language Model for Dialogue Applications), Megatron-Turing NLG (Natural Language Generation), Jurassic-1 Jumbo

- Specialized LLMs: WuDao 2.0, GPT-3 for Healthcare, BlenderBot 3

- Accessible LLMs: Gemini, Hugging Face Transformers

Interested in discovering papAI?

Our AI expert team is at your disposal for any questions
