Data Lake Mastery: Best Practices & Use Cases Unveiled

Harnessing the power of information is essential in today’s data-driven environment. A data lake supports this by offering a centralized location where all the necessary data can be gathered and used.

“The State of Big Data 2023” by Qlik states that 73% of organizations using data lakes report improved decision-making.

In this article, we’ll look at what a data lake is, the benefits it offers, and the best practices for building one.

What Does Data Lake Mean?

The term “data lake” refers to a centralized storage system designed to store and process large amounts of raw data in its original format.

Unlike traditional data storage solutions, a data lake keeps data in its raw, native form, allowing organizations to store a wide variety of information without transforming it first.

This flexibility is valuable because a single repository can hold structured, semi-structured, and unstructured data, giving a comprehensive picture of the company’s information landscape.

Data Lake vs Data Warehouse:

The data in a data lake is kept in its raw form until it’s needed for analysis or other processing jobs.

This contrasts with data warehouses, which require data to be structured before it is stored. By preserving raw data, the Data Lake enables an analytical approach that is both nimble and scalable.

This allows data scientists and analysts to freely explore, analyze, and draw insights without being constrained by pre-established frameworks. Because of this, businesses can draw significant conclusions and patterns from their data, which supports strategic planning and well-informed decision-making.

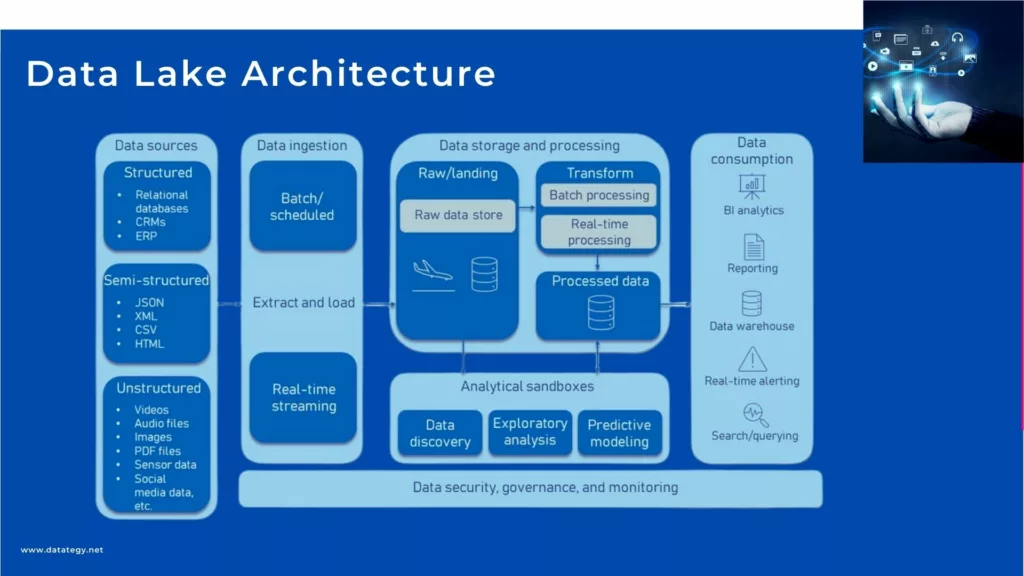

What are the Components of a Data Lake Architecture?

1- Data Lake Architecture's Core Elements

Storage Layer: The storage layer, which houses data in its unprocessed state, is the foundation of any Data Lake design. Scalable and fault-tolerant storage is provided by distributed file systems and object stores such as Hadoop Distributed File System (HDFS), Azure Data Lake Storage, and Amazon S3. This layer is essential for accommodating constantly growing datasets, ensuring durability, and enabling efficient data retrieval.

Ingestion Layer: This layer is in charge of efficiently supplying the Data Lake with data. It includes frameworks and tools for ingesting data in batches as well as in real time. Because they can handle a wide range of data sources, Apache NiFi and Apache Kafka are frequently used by enterprises to ingest data at scale and adapt to varying ingestion patterns.
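To make the batch versus real-time distinction concrete, here is a minimal sketch of micro-batching, where records arrive one at a time but are written to storage in groups. The `MicroBatchIngester` class, the `sink` callable, and the batch size of 3 are illustrative assumptions and do not reflect the API of Apache NiFi or Apache Kafka.

```python
class MicroBatchIngester:
    """Buffer incoming records and flush them to a sink in fixed-size batches."""

    def __init__(self, sink, batch_size):
        self.sink = sink              # hypothetical callable that persists one batch
        self.batch_size = batch_size
        self._buffer = []

    def ingest(self, record):
        """Accept a single record; flush once the buffer is full."""
        self._buffer.append(record)
        if len(self._buffer) >= self.batch_size:
            self.flush()

    def flush(self):
        """Write any buffered records as one batch and empty the buffer."""
        if self._buffer:
            self.sink(list(self._buffer))
            self._buffer.clear()


# Stand-in for lake storage: every flushed batch is appended to a list.
written = []
ingester = MicroBatchIngester(sink=written.append, batch_size=3)
for i in range(7):
    ingester.ingest({"event_id": i})
ingester.flush()  # push the final, partially filled batch
```

A smaller batch size moves the behaviour closer to real-time ingestion, while a larger one trades latency for fewer, bigger writes.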

2- Layer for Processing and Analysis

Data Processing Engines: These engines are the backbone of this layer, allowing raw data to be transformed and analysed. Thanks to their distributed computing capabilities, Apache Spark, Apache Flink, and Hadoop MapReduce are frequently used to process and analyse massive amounts of data in parallel.

Governance and Security: Data governance and security mechanisms underpin the whole Data Lake architecture. Implementing compliance procedures, encryption, and access controls helps ensure that data is handled securely and in line with legal requirements. Effective governance relies on tools such as Apache Ranger or cloud-native identity and access management services.

3- Integration and Management Layer

Workflow Orchestration: Technologies such as Apache Airflow or Apache Oozie schedule and coordinate data processing workflows. By automating the execution of complex, multi-step data tasks, these tools keep processes inside the Data Lake efficient and repeatable.
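The core idea behind orchestration is running tasks in dependency order. The sketch below illustrates that idea with Python's standard `graphlib` module (Python 3.9+); the task names and the `run_pipeline` helper are hypothetical, and this is not how Apache Airflow or Apache Oozie are configured.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "ingest_orders": set(),
    "ingest_customers": set(),
    "clean_orders": {"ingest_orders"},
    "join_orders_customers": {"clean_orders", "ingest_customers"},
    "publish_report": {"join_orders_customers"},
}


def run_pipeline(dag, tasks):
    """Run every task after all of its dependencies; return the execution order."""
    order = []
    for name in TopologicalSorter(dag).static_order():
        tasks[name]()
        order.append(name)
    return order


# Each placeholder task only records that it ran.
log = []
TASKS = {name: (lambda n=name: log.append(n)) for name in PIPELINE}
executed = run_pipeline(PIPELINE, TASKS)
```

Real orchestrators add scheduling, retries, and monitoring on top of this ordering step.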

Monitoring and Management: Tools for monitoring are essential to preserving the Data Lake’s functionality and overall health. Proactive management and optimization are made possible by solutions like Cloudera Manager, Apache Ambari, or cloud-native monitoring services, which offer insights into consumption trends, system performance, and possible bottlenecks.

What are the Benefits of using a Data Lake?

1- Flexibility in Data Processing

Flexibility in data processing is one of a data lake’s primary characteristics. As opposed to conventional data warehouses, which demand a predetermined schema before data is loaded, a data lake employs a schema-on-read methodology. As a result, raw data can be ingested without being structured up front, and a schema is applied only when the data is read for analysis. This adaptability is especially useful in settings where data structures and formats change quickly.
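As a rough illustration of schema-on-read, the sketch below stores raw JSON lines untouched and applies a schema only when they are read. The sample events, the `SALES_SCHEMA` mapping, and the `read_with_schema` helper are all made up for this example.

```python
import json

# Raw events land in the lake exactly as produced; fields vary between records.
RAW_EVENTS = [
    '{"user_id": "42", "amount": "19.90", "country": "FR"}',
    '{"user_id": 7, "amount": 5}',
    '{"user_id": "13", "amount": "n/a", "device": "mobile"}',
]

# Hypothetical read-time schema: field name -> (converter, default value).
SALES_SCHEMA = {
    "user_id": (int, None),
    "amount": (float, 0.0),
    "country": (str, "unknown"),
}


def read_with_schema(raw_line, schema):
    """Parse one raw JSON line and project it onto the schema at read time."""
    record = json.loads(raw_line)
    row = {}
    for field, (convert, default) in schema.items():
        try:
            row[field] = convert(record[field])
        except (KeyError, TypeError, ValueError):
            # Missing or malformed values fall back to the default.
            row[field] = default
    return row


rows = [read_with_schema(line, SALES_SCHEMA) for line in RAW_EVENTS]
```

Because the stored lines never change, a different team could read the same events with a different schema, for instance one that keeps the `device` field.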

2- A Unified Perspective on the Data

By centralising data from several sources, a data lake provides an organisation with a single view of its data environment. By breaking down the data silos that may exist across departments or systems, this consolidation offers a comprehensive view of the organization’s information assets. This unified picture supports better decision-making because stakeholders can see how data from different sources relates.

3- Big Data Scalability

A key strength of data lakes is scalability, particularly when managing large amounts of data. Data Lakes offer a scalable architecture that can efficiently store and analyse large datasets as data volumes continue to grow. This scalability means organisations can adapt to the changing needs of big data analytics, whether they face sudden spikes in data volume or steady growth over time.

4- Real-time Data Processing

Streaming technologies such as Apache Kafka or Apache Flink enable real-time data processing in a data lake. They support real-time data ingestion and analysis, allowing organizations to react quickly to changing circumstances and make well-informed decisions almost instantly.
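A common building block of stream processing is windowed aggregation. The sketch below counts events per fixed, non-overlapping (tumbling) window over an in-memory list; it only simulates the concept and does not use the Kafka or Flink APIs. The event tuples and the 60-second window are assumptions.

```python
from collections import defaultdict


def tumbling_window_counts(events, window_seconds):
    """Count events per (window start, key) for fixed, non-overlapping windows."""
    counts = defaultdict(int)
    for timestamp, key in events:
        # Align each timestamp to the start of the window that contains it.
        window_start = (timestamp // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


# Hypothetical stream of (timestamp in seconds, event type) pairs.
events = [
    (0, "click"), (12, "click"), (59, "view"),
    (60, "click"), (61, "click"), (125, "view"),
]
counts = tumbling_window_counts(events, window_seconds=60)
```

A real streaming engine computes the same kind of result incrementally as events arrive and also has to deal with late or out-of-order data.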

5- Enhanced Data Governance

Data governance in a data lake means establishing and enforcing rules and processes that guarantee data security, integrity, and quality. Metadata management, data lineage tracking, and access controls are all part of a well-defined governance system.

These measures make the data more trustworthy, reduce the risk of data breaches or misuse, and help ensure that legal requirements are met. Strong data governance builds confidence in the accuracy and reliability of the data kept in the Data Lake.
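Metadata management and lineage tracking can be pictured as a small catalog that records, for each dataset, who owns it, how it is tagged, and which datasets it was derived from. The `DatasetEntry` and `Catalog` classes below are a hypothetical sketch, not the data model of any specific governance tool.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetEntry:
    name: str
    owner: str
    tags: set = field(default_factory=set)
    derived_from: list = field(default_factory=list)  # direct upstream datasets


class Catalog:
    """In-memory metadata catalog with basic lineage lookup."""

    def __init__(self):
        self._entries = {}

    def register(self, entry):
        # Refuse entries whose upstream datasets were never catalogued.
        for parent in entry.derived_from:
            if parent not in self._entries:
                raise KeyError(f"unknown upstream dataset: {parent}")
        self._entries[entry.name] = entry

    def lineage(self, name):
        """Return every upstream dataset of `name`, nearest first."""
        seen, queue = [], list(self._entries[name].derived_from)
        while queue:
            parent = queue.pop(0)
            if parent not in seen:
                seen.append(parent)
                queue.extend(self._entries[parent].derived_from)
        return seen

    def find_by_tag(self, tag):
        """Return the sorted names of all datasets carrying `tag`."""
        return sorted(e.name for e in self._entries.values() if tag in e.tags)


catalog = Catalog()
catalog.register(DatasetEntry("raw_orders", "ingest-team", {"pii"}))
catalog.register(DatasetEntry("clean_orders", "data-eng", {"pii"}, ["raw_orders"]))
catalog.register(DatasetEntry("sales_report", "analytics", set(), ["clean_orders"]))

upstream = catalog.lineage("sales_report")
pii_datasets = catalog.find_by_tag("pii")
```

With lineage recorded this way, a data quality issue in `raw_orders` can be traced forward to every report that depends on it.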

6- AI and Machine learning Facilitation

Machine learning (ML) and artificial intelligence (AI) initiatives find fertile ground in data lakes. A data lake’s vast and varied collection of data offers ML models plenty of training data. By using this platform, organizations can build and deploy AI solutions that automate procedures, identify trends, and make predictions based on data-driven insights.

Best Practices to Build your Data Lake:

1- Establish Goals and Scope

- Give a clear explanation of the objectives and purpose of your data lake.

- Align the organization’s overarching goals with the Data Lake approach.

- Determine the scope, taking into account the expected use cases and the data types to be kept.

2- Build a Robust Foundation

- Define the Data Lake’s role within your overall data architecture.

- Make sure that the Data Lake is perceived as more than just a place to store information.

- Find integration points by carefully evaluating the current data infrastructure.

3- Create an extensive governance framework

- Establish and enforce data governance policies from the outset.

- Address the security, integrity, and quality of the data.

- For efficient stewardship, set up metadata management, data lineage, and access controls.

4- Streamline Storage Systems

- Adopt cost-effective storage options to strike a balance between performance and cost.

- To improve storage efficiency, investigate partitioning and compression techniques.

- Think about using data tiering to separate archived data from regularly accessible data.
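The three storage practices above can be sketched with the standard library alone. The Hive-style `key=value` directory layout is a widely used partitioning convention; the dataset name, the 30-day hot threshold, and the helper functions are assumptions chosen for illustration.

```python
import gzip
import json
from datetime import date


def partition_path(dataset, event_date):
    """Build a Hive-style partition path (key=value directories) for a date."""
    return (
        f"{dataset}/year={event_date.year}"
        f"/month={event_date.month:02d}/day={event_date.day:02d}"
    )


def choose_tier(last_accessed, today, hot_days=30):
    """Label recently read data 'hot' and everything else 'archive'."""
    return "hot" if (today - last_accessed).days <= hot_days else "archive"


# Compression: repetitive JSON lines usually shrink considerably under gzip.
records = [{"order_id": i, "status": "shipped"} for i in range(1000)]
raw = "\n".join(json.dumps(r) for r in records).encode("utf-8")
compressed = gzip.compress(raw)

path = partition_path("sales/orders", date(2024, 3, 9))
tier = choose_tier(last_accessed=date(2024, 1, 1), today=date(2024, 3, 9))
```

Partitioning by date lets queries skip directories outside the requested range, while the tier label can drive a move to cheaper storage.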

5- Take into Account Various Data Types

- Create the Data Lake with the ability to handle unstructured, semi-structured, and structured data types.

- To be flexible, apply schema-on-read techniques.

- Make sure it works with a variety of data sources and formats.

6- Put Sophisticated Security Mechanisms in Place

- Implement strict security measures to safeguard confidential information.

- Use encryption for both at-rest and in-transit data.

- To verify compliance and find irregularities, audit and monitor access on a regular basis.
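Auditing access can start with something as simple as replaying an access log against the access policy and flagging users with repeated denials. The roles, path prefixes, log entries, and the threshold of two denials below are hypothetical.

```python
from collections import Counter

# Hypothetical policy: role -> dataset path prefixes that role may read.
POLICY = {
    "analyst": ("curated/",),
    "engineer": ("raw/", "curated/"),
}


def is_allowed(role, path):
    """Return True if the role may read the path; unknown roles get no access."""
    return path.startswith(POLICY.get(role, ()))


def audit(access_log, max_denials=2):
    """Return the users whose denied requests exceed max_denials."""
    denials = Counter(
        user for user, role, path in access_log if not is_allowed(role, path)
    )
    return sorted(user for user, count in denials.items() if count > max_denials)


# Hypothetical log of (user, role, requested path) entries.
ACCESS_LOG = [
    ("alice", "analyst", "curated/sales"),
    ("bob", "analyst", "raw/payments"),
    ("bob", "analyst", "raw/customers"),
    ("bob", "analyst", "raw/orders"),
    ("carol", "engineer", "raw/orders"),
    ("dave", "intern", "curated/sales"),
]
flagged = audit(ACCESS_LOG)
```

In practice the policy would live in an access management service and the log would come from the storage layer, but the check itself follows the same pattern.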

How does papAI Enhance the Efficiency of Data Ingestion Processes within a Data Lake Environment?

With a range of capabilities intended to improve productivity, accessibility, and analytics within this intricate data ecology, papAI is a formidable ally in the field of data lakes.

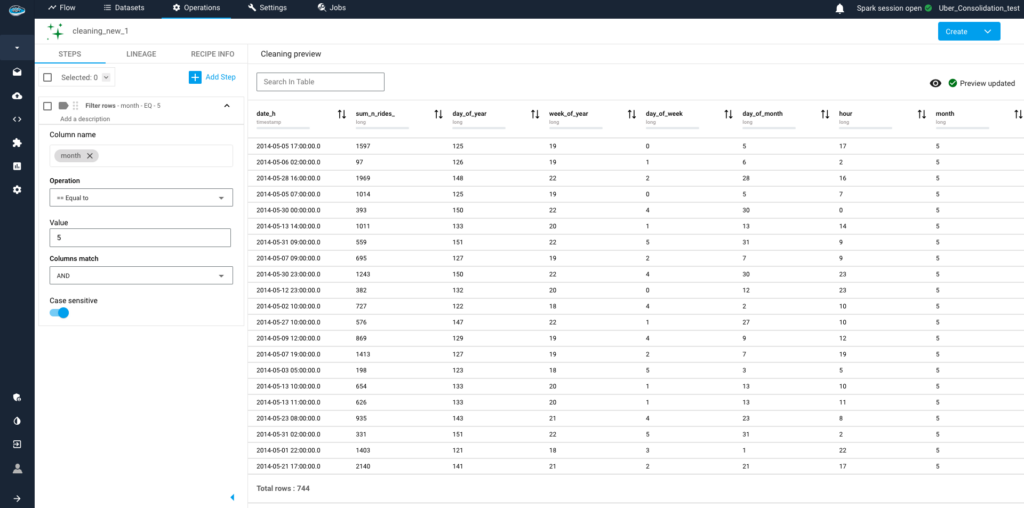

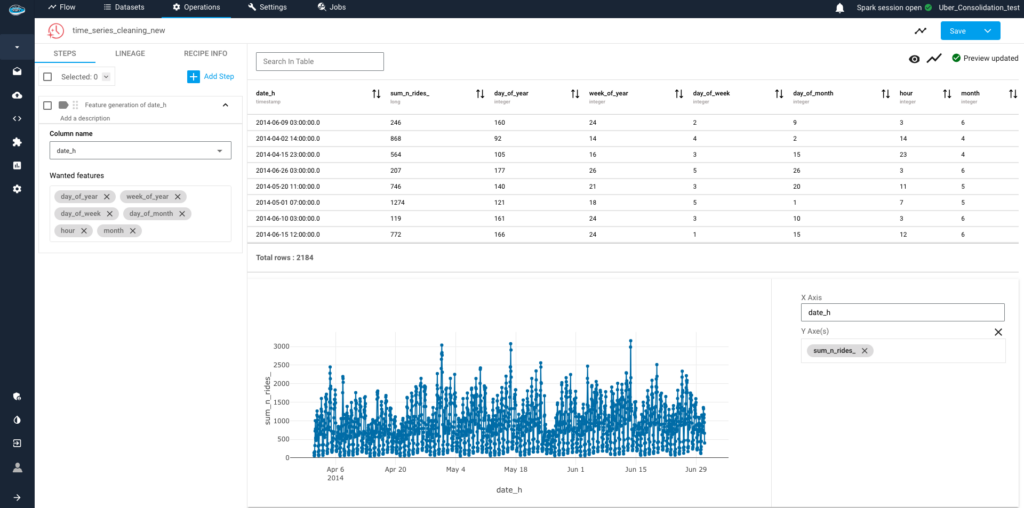

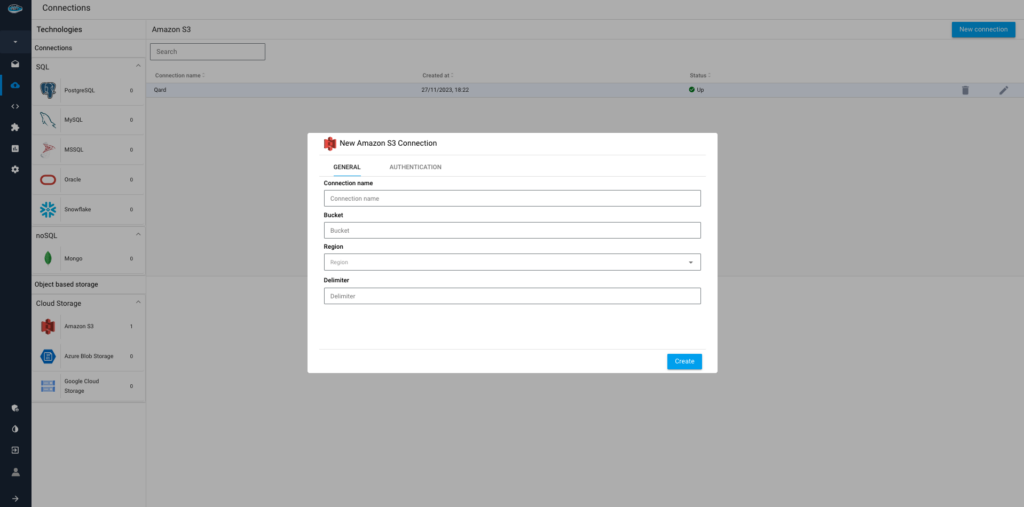

Effective Integration and Ingestion of Data

By offering user-friendly tools and connectors, papAI streamlines data ingestion into the Data Lake. Its strong integration capabilities allow data from many sources to enter the Data Lake with ease.

These capabilities also optimize the extraction, transformation, and loading (ETL) procedures. The platform allows for the integration of structured, semi-structured, and unstructured data by supporting both batch and real-time ingestion techniques.

Enhanced Metadata Management

Metadata management is a crucial component of Data Lake governance, and it is an area where papAI excels. The platform offers a thorough view of the stored data by automatically capturing and cataloging metadata.

papAI improves data discoverability and comprehension through sophisticated metadata tagging and lineage tracking, enabling users to navigate the Data Lake with confidence. This careful metadata management supports effective data governance, compliance, and transparency.

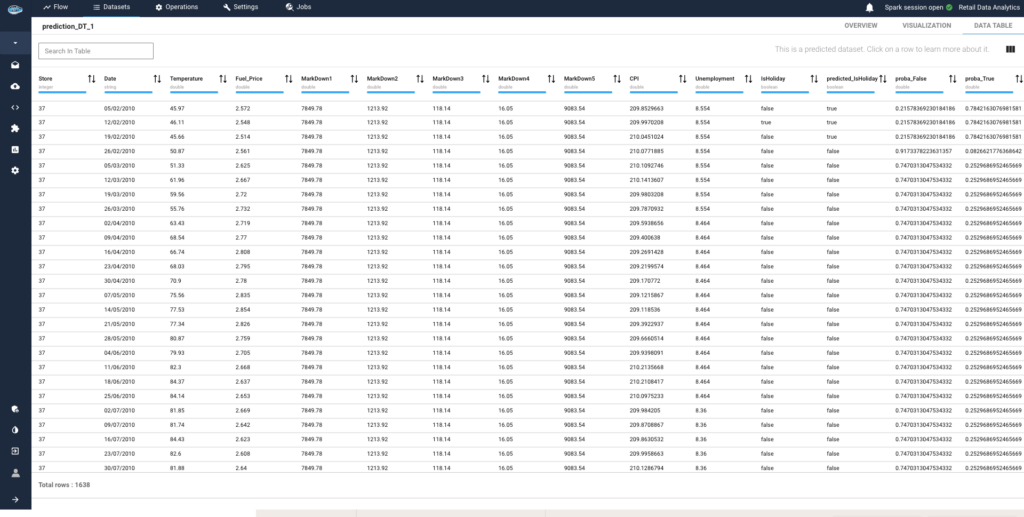

Advanced Data Analysis and Processing

The platform integrates modern data processing engines together with machine learning algorithms and sophisticated analytics tools.

papAI facilitates effective data transformation, exploration, and analysis by using the capabilities of Apache Spark and other processing frameworks. Its smooth integration with machine learning libraries enables predictive modeling and pattern identification, so meaningful insights can be extracted from the Data Lake’s extensive data reservoirs.

Cloud service integration and scalability

papAI is built for scalability. It connects with cloud services with ease, allowing businesses to take advantage of the flexibility and scalability of cloud-based computing and storage resources. As a result, the Data Lake can expand to accommodate growing data processing and storage needs, which makes papAI a good fit for businesses adopting cloud-native architectures.

In summary, papAI has significant and rewarding transformational potential in the Data Lake context.

papAI is a comprehensive solution for organizations negotiating the intricacies of contemporary data architecture, thanks to its advanced capabilities in data intake, metadata management, collaborative exploration, and adaptive governance.

To fully grasp the potential and observe the immediate advantages for your company, we cordially encourage you to proceed and schedule a customized demonstration session. Our specialists will walk you through the features, respond to your particular questions, and show you how papAI can be customized to meet your particular data issues.