From A to Z: What is MLOps?

The challenges of keeping machine learning running in production applications became apparent early on. MLOps (machine learning operations) practices are now being adopted across industries including finance, healthcare, retail, and manufacturing. According to the latest research, the global MLOps market is expected to reach $4.1 billion by 2026.

To discover the full potential of MLOps in your industry, visit our website now.

In this article, we trace the history of MLOps, define what it means, and look at its practical applications.

What is MLOps (Machine Learning Operations)?

In order to effectively deploy and manage machine learning solutions in practical applications, MLOps prioritizes efficiency, scalability, and continual development. Its goal is to close the gap between data science and IT operations.

In 2024, Machine Learning Operations, or MLOps, is described as a comprehensive strategy that combines operations with machine learning to speed up the creation, deployment, and maintenance of machine learning models. It includes a collection of practices, tools, and approaches intended to improve cross-functional teamwork, automate repetitive work in the machine learning pipeline, and ensure that models integrate smoothly into real-world settings.

The Evolution of MLOps in Recent Years

MLOps has changed considerably over time. It first appeared as an extension of DevOps practices, and it gained prominence as businesses looked for ways to handle the particular difficulties of deploying and maintaining machine learning models in production.

- Early 2000s: This was the period when machine learning was first developing, which sparked improvements in methods and algorithms. A pivotal stage in the development of artificial intelligence and data analytics, this foundational era set the stage for the eventual creation of MLOps.

- 2010-2015 (Rise of Big Data): The explosion of big data between 2010 and 2015 led to a rise in the use of machine learning in data analysis. The difficulties in operationalizing machine learning became apparent when companies struggled to implement models in real-world situations, which opened the door for the subsequent creation of MLOps procedures.

- 2016-2018 (The adoption begins): During the years 2016 to 2018, companies began to understand the specific difficulties associated with implementing and maintaining machine learning models in a production environment. This era signified a pivotal moment in recognizing the necessity for specialized procedures, thereby setting the foundation for the rise and delineation of MLOps as a separate discipline.

- 2017-2020 (Increased interest): The term “MLOps” became well-known in 2017 as businesses looked to handle machine learning’s operational difficulties. Between 2017 and 2020, MLOps evolved to improve cooperation and efficiency. It emphasized automation, continuous integration, and continuous deployment while optimizing the machine learning pipeline.

- 2021-2023 (Focus on scalability): Between 2021 and 2023, MLOps shifted strongly towards automation, with a particular emphasis on continuous integration and deployment. To satisfy the ever-changing needs of the machine learning pipeline, the framework evolved to incorporate new developments in scalability, monitoring, and governance, which improved efficiency and allowed for real-time model performance evaluation.

- 2024 (The stage of maturation): By 2024, MLOps had grown into a strong framework that reflects the industry’s widespread use of standardized practices. Businesses now use these practices to manage the whole lifecycle of machine learning models, covering design, deployment, and continuous maintenance for long-term success in practical applications.

Who are the Profiles that Use MLOps in a Company?

In a company, various profiles collaborate to implement and utilize MLOps practices. These profiles include:

- Data Scientists:

In MLOps, data scientists are essential because they develop and hone machine learning models. To analyze large datasets, glean insights, and create prediction models, they make use of statistical techniques and programming expertise. By guaranteeing the precision and applicability of these models, data scientists play an important part in the efficient implementation of machine learning solutions inside an organization’s operational structure.

- Data Engineers:

With their focus on creating and managing reliable data pipelines, data engineers play a critical role in MLOps. To provide structured datasets for model training and deployment, they extract, transform, and load (ETL) data. By guaranteeing the availability and reliability of data, data engineers make it possible to integrate machine learning models smoothly into production settings, which streamlines the whole MLOps process.

- DevOps Engineers:

In MLOps, DevOps engineers specialize in combining development and operations to streamline the deployment of machine learning models. They implement continuous integration and continuous deployment (CI/CD), create and manage automated workflows, and ensure effective communication between data science and IT teams. Through their emphasis on automation, scalability, and system performance, DevOps engineers are essential to the smooth operationalization of machine learning models.

Efficient MLOps Implementation: 7 Steps to Success

To put MLOps into practice, start with exploratory data analysis and data preparation, then move on to model training and tuning. For smooth deployment, use continuous integration/continuous deployment (CI/CD) and put your models under version control. Use robust monitoring to track model performance and trigger automated retraining. The seven steps to follow are listed below:

Components of MLOps

| Component | Description |
|---|---|
| Exploratory Data Analysis (EDA) | Thoroughly analyze and visualize data to gain insights and inform subsequent model development decisions. |
| Data Preparation and Feature Engineering | Cleanse, preprocess, and engineer features to optimize data for effective machine learning model training. |
| Model Training and Tuning | Develop machine learning models, optimizing parameters to enhance accuracy, generalization, and overall performance. |
| Model Review and Governance | Conduct rigorous reviews, ensuring models adhere to ethical standards, regulatory requirements, and organizational governance policies. |
| Model Inference and Serving | Deploy models into production environments, enabling real-time predictions and serving results to end-users or downstream systems. |
| Model Monitoring | Continuously observe and assess model performance, health, and behavior in real-world scenarios for proactive issue identification. |
| Automated Model Retraining | Implement automated processes to periodically retrain models based on new data, ensuring continued relevance and accuracy. |
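
To make the components above more concrete, here is a minimal Python sketch of four of them (data preparation, training, review, and inference) chained together. It is an illustration under simplifying assumptions, not a production pipeline: the function names `prepare`, `train`, `review`, and `predict`, the single-feature linear model, and the `max_error` threshold are all hypothetical choices made for this example, and only the standard library is used.

```python
from statistics import mean, pstdev

def prepare(rows):
    """Data preparation: drop rows with missing values, standardize the feature."""
    clean = [(x, y) for x, y in rows if x is not None and y is not None]
    xs = [x for x, _ in clean]
    mu, sigma = mean(xs), pstdev(xs) or 1.0  # avoid dividing by zero
    return [((x - mu) / sigma, y) for x, y in clean], (mu, sigma)

def train(data):
    """Model training: fit y = a*x + b by ordinary least squares."""
    xs = [x for x, _ in data]
    ys = [y for _, y in data]
    mx, my = mean(xs), mean(ys)
    var = sum((x - mx) ** 2 for x in xs)
    a = sum((x - mx) * (y - my) for x, y in data) / var if var else 0.0
    return a, my - a * mx

def review(model, holdout, max_error):
    """Model review: a simple quality gate on mean absolute error."""
    a, b = model
    return mean(abs(a * x + b - y) for x, y in holdout) <= max_error

def predict(model, scaler, raw_x):
    """Model inference: reuse the training-time scaling, then apply the model."""
    (a, b), (mu, sigma) = model, scaler
    return a * (raw_x - mu) / sigma + b

# Toy data following y = 2x + 1, with one incomplete row.
rows = [(1, 3), (2, 5), (None, 4), (3, 7), (4, 9)]
data, scaler = prepare(rows)
model = train(data[:3])                            # train on the first rows
approved = review(model, data[3:], max_error=0.5)  # gate on the held-out row
if approved:
    print(round(predict(model, scaler, 5), 2))
```

Keeping the scaler returned by `prepare` and reusing it in `predict` reflects a common design choice: the serving path should apply exactly the same transformation as the training path.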

What are the Benefits of MLOps?

A 2023 survey by DevOps Research and Assessment (DORA) found that 58% of organizations have adopted or are piloting MLOps practices, up from 42% in 2020. Let’s look at the main advantages of MLOps:

- Efficient Resource Management:

Effective resource management is at the heart of MLOps. By automating deployment and scaling, MLOps reduces resource waste and maximizes utilization, which leads to significant cost savings. This efficiency applies across model creation, training, and deployment rather than to a single phase. The ability to scale resources in response to changing demand also helps teams improve the overall effectiveness of machine learning projects. In the fast-moving field of data science, effective resource management is a practical step toward cost-effectiveness and sustainability.

- Automation and Scalability:

- Model Governance and Compliance:

As we navigate 2024, the practical applications of MLOps resonate profoundly. The ability to automate, govern, and continually improve machine learning workflows ensures sustained relevance and efficiency. This is particularly significant in an era where data-driven decision-making is at the forefront of organizational strategy.

Thibaud Ishacian

Product Owner At Datategy

How does papAI Facilitate MLOps?

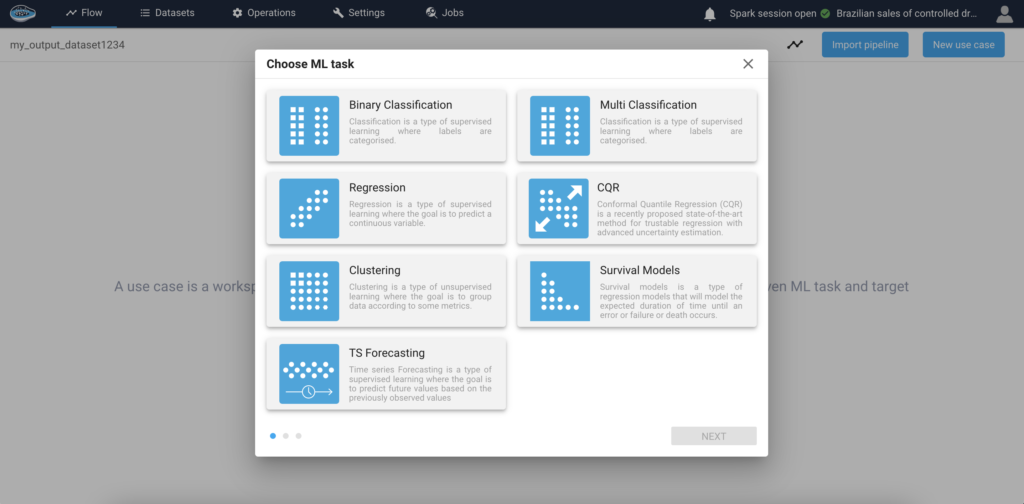

Companies can implement and industrialize AI and data science initiatives using the papAI solution. It is collaborative by design: teams work together on complex projects on a single platform, through a shared interface. Its features include a range of machine learning techniques, model deployment options, data exploration and visualization tools, and data cleaning and pre-processing capabilities.

- Streamlined Model Deployment

The papAI solution greatly simplifies the deployment of machine learning models through its Model Hub, the central source of papAI’s pre-built, deployment-ready models (binary classification, regression, clustering, time series forecasting, and others). Consequently, companies no longer have to start from zero when developing their models. Our most recent survey shows that users of the papAI solution save 90% of their time on average when implementing AI projects.

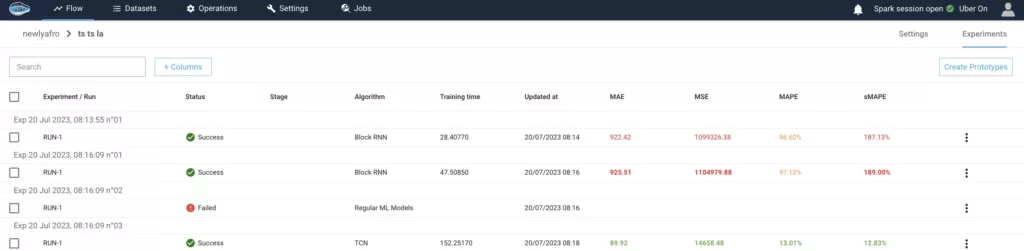

- Effective Model Monitoring:

With the wide range of monitoring tools provided by papAI Solution, organizations can keep an eye on the most crucial model performance metrics in real time. Metrics like accuracy, precision, and other relevant indicators allow users to monitor the model’s performance and identify any potential issues or deviations. This continuous monitoring allows preemptive detection of any decline in model performance, ensuring high-quality outputs. Our clients have reached proven accuracy levels of up to 98%.

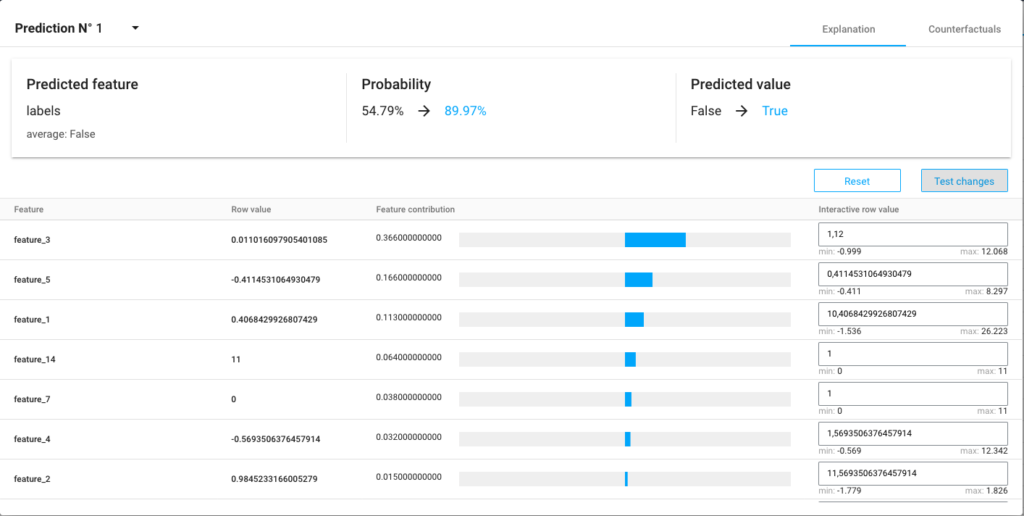
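
As a rough illustration of what monitoring accuracy and precision can look like in code, the Python sketch below keeps a sliding window of labeled predictions and flags a decline when accuracy drops below a threshold. It is not papAI’s implementation: the `MetricMonitor` class, its `window` and `min_accuracy` parameters, and the binary-classification setting are assumptions made for this example.

```python
from collections import deque

class MetricMonitor:
    """Tracks accuracy and precision over a sliding window of predictions."""

    def __init__(self, window=100, min_accuracy=0.9):
        self.events = deque(maxlen=window)  # oldest events fall out automatically
        self.min_accuracy = min_accuracy

    def record(self, predicted, actual):
        self.events.append((bool(predicted), bool(actual)))

    def accuracy(self):
        if not self.events:
            return None
        return sum(p == a for p, a in self.events) / len(self.events)

    def precision(self):
        true_pos = sum(p and a for p, a in self.events)
        false_pos = sum(p and not a for p, a in self.events)
        total = true_pos + false_pos
        return true_pos / total if total else None

    def degraded(self):
        """True when windowed accuracy has fallen below the threshold."""
        acc = self.accuracy()
        return acc is not None and acc < self.min_accuracy

monitor = MetricMonitor(window=4, min_accuracy=0.75)
for predicted, actual in [(1, 1), (1, 0), (0, 0), (1, 1)]:
    monitor.record(predicted, actual)
healthy_accuracy = monitor.accuracy()   # 3 correct out of 4
monitor.record(0, 1)                    # a miss pushes out the oldest hit
print(healthy_accuracy, monitor.accuracy(), monitor.degraded())
```

In a real deployment the `degraded()` signal would typically feed an alert or a retraining job, and the ground-truth labels would arrive with some delay.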

- Improved Explainability and Interpretability of the Model:

Historically, deep learning and complex machine learning models have been perceived as “black boxes,” making it challenging to understand how they produce predictions. The papAI solution takes on this issue head-on by offering state-of-the-art techniques for model explainability. It highlights the features that have the greatest impact on predictions and provides insights into the inner workings of the models. Thanks to papAI, stakeholders can see how AI models reach their decisions, which removes much of the mystery around their predictions.
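
One widely used, model-agnostic way to estimate which features influence predictions is permutation importance: shuffle one feature column and measure how much the error grows. The Python sketch below shows the idea under stated assumptions. It is not a description of the techniques papAI uses; the function `permutation_importance`, the toy model, and the data are hypothetical and exist only for illustration.

```python
import random

def permutation_importance(predict, rows, targets, n_features, seed=0):
    """Increase in mean absolute error when each feature column is shuffled."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible

    def error(data):
        return sum(abs(predict(r) - t) for r, t in zip(data, targets)) / len(targets)

    baseline = error(rows)
    scores = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        # Rebuild each row with only feature j replaced by its shuffled value.
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        scores.append(error(shuffled) - baseline)
    return scores

# Toy model that depends on feature 0 and ignores feature 1.
def toy_model(row):
    return 3 * row[0]

rows = [(i, i % 3) for i in range(8)]
targets = [toy_model(r) for r in rows]
scores = permutation_importance(toy_model, rows, targets, n_features=2)
print(scores)
```

Shuffling the ignored feature leaves the predictions unchanged, so its score is zero, while the score of the feature the model relies on reflects how much the error grows when its values are scrambled.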

Unlock the potential of deploying your AI-based tool with papAI solution

Discover how the papAI solution simplifies the deployment and management of machine learning models so that you can put them to full use. During the demo, our expert team will guide you through papAI’s integration, streamlined workflow, and advanced features, giving you a comprehensive understanding of its capabilities. Revolutionize your organization’s approach to AI with papAI.

Q&A

In 2024, Machine Learning Operations, or MLOps, is described as a comprehensive strategy that combines operations with machine learning to speed up the creation, deployment, and maintenance of machine learning models.

In a company, various profiles collaborate to implement and utilize MLOps practices. These profiles include Data Scientists, Data Engineers, and DevOps engineers.

MLOps brings significant advantages to organizations, including streamlined resource management, automated and scalable processes, and robust model governance and compliance.

papAI excels in facilitating MLOps through its seamless model deployment, efficient model monitoring, and enhanced explainability and interpretability, elevating the overall operational efficiency of the system.

Interested in discovering papAI

Our team of AI experts will be happy to answer any questions you may have
