How to Scale AI Without Breaking Your Infrastructure in 2025

In 2025, AI is projected to drive 95% of IT projects. As adoption accelerates across industries, many organisations face a new hurdle: moving from experimental models to enterprise-scale systems without overloading infrastructure, overrunning budgets, or creating operational turmoil.

2025 will be a turning point for scalable AI, rewarding not only those who build more intelligent algorithms but also those who can coordinate people, data, and compute at scale.

In this article, we explore the key trends shaping scalable AI infrastructure, from agentic systems to data pipelines and hybrid cloud architectures. If you’re serious about AI that works in the real world, this is your playbook.

Why AI Scaling Is Not Just a Technical Challenge

When scaling AI, technical factors such as computational power, model performance, and cloud strategy are usually the first considerations, but they are not the only ones. In practice, the hardest obstacles tend to come from your organisation's structure, mindset, and decision-making processes rather than from your technology stack. Many businesses fail not because their models underperform, but because they underestimate the time and effort needed to move from proof of concept to enterprise-wide adoption. The friction usually comes from people, processes, and policies. Here is why:

– Organisational Alignment Is Often the Largest Bottleneck:

The lack of coordination between data teams, IT, and business divisions is one of the most underappreciated obstacles to scaling AI. Although the importance of AI is widely acknowledged, few organisations are fundamentally prepared to support it at scale. What begins as a pilot in one department turns into an uphill battle once the organisation tries to expand it: budgets fall out of sync, priorities diverge, and decision-making becomes fragmented.

For instance, data scientists may push for rapid experimentation while IT enforces strict infrastructure rules. The result is frustration, delays, and rework. Executive leadership, meanwhile, often backs AI without fully understanding its long-term operational implications, leaving a gap between vision and implementation.

– Poor Data Readiness and Cultural Resistance Slow Things Down:

Even the most powerful models are only as good as the data that feeds them, yet most businesses rely on fragmented, disorganised, or outdated data pipelines. Worse, scattered datasets, redundant systems, and conflicting definitions across teams make it hard to trust AI-driven results.

Beyond the data problem, however, lies a deeper cultural issue: resistance to change. Workers may fear that AI will devalue their expertise or automate their jobs, and managers may be reluctant to hand decision-making authority to algorithms. This kind of cultural inertia can quietly undermine even the strongest AI programmes.

– Workflows and Procedures Aren’t Designed to Integrate AI:

Many conventional business processes were not designed with AI in mind. Most legacy procedures assume slow approval cycles, batch updates, and manual intervention. Bolting AI onto them without rethinking the underlying workflow can lead to malfunctions, inefficiencies, or even legal issues.

Consider customer service. A chatbot may perform beautifully in tests, yet it delivers a fragmented experience if it cannot log interactions in the CRM in real time or escalate to a human agent when needed. The same holds for maintenance planning, fraud detection, and supply chain forecasting: AI insights lose their value if they cannot be acted on within existing workflows.

Scaling AI requires reimagining how decisions are made and where automation fits. It also means investing in process engineering, updating SOPs, training staff, and ensuring workflows can accommodate real-time data and model outputs.

Thibaud Ishacian

Head of Product - Datategy

1- Data as a Strategic Asset

In the rush to scale AI, many organisations still hold the outdated belief that more data inevitably means better outcomes. In 2025, that narrative is changing: curating the right data, at the right quality, for the right purpose now matters more than piling raw data into enormous lakes.

Intelligent curation is increasingly the real competitive advantage. Companies that scale AI successfully now prioritise structure, relevance, and freshness over volume. They invest in data versioning, labelling, and metadata management so that their AI systems learn from precise, contextual inputs rather than noise.

Another significant shift is the rise of synthetic and distilled data. As privacy laws tighten and real-world data becomes harder to access (or more costly to clean), companies are using synthetic datasets to train models safely and effectively. Because synthetic data can replicate real-world statistical patterns without exposing private records, it is well suited to sectors such as healthcare and finance.

Distilled data, on the other hand, condenses enormous datasets into representative subsets, lowering the computing load without sacrificing model performance. These methods are no longer considered experimental; they are becoming indispensable tools in the AI arsenal, particularly when scaling solutions across business lines or regions.
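The idea of condensing a dataset into a representative subset can be illustrated with a minimal Python sketch. Note the assumption: in the research literature, dataset distillation usually means synthesizing a small set of new training examples, whereas this sketch uses a simpler stand-in, stratified subsampling, which shrinks the data while keeping each label's share intact. The function `stratified_subset` and its parameters are hypothetical, and synthetic data generation is not covered.

```python
import random
from collections import defaultdict


def stratified_subset(records, label_key, fraction, seed=0):
    """Return a smaller sample that keeps each label's share of the data.

    A simple stand-in for "distilled" data: a representative subset that is
    cheaper to train and iterate on than the full dataset.
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    rng = random.Random(seed)  # fixed seed keeps the subset reproducible

    # Group records by label so each group can be sampled separately.
    by_label = defaultdict(list)
    for record in records:
        by_label[record[label_key]].append(record)

    subset = []
    for group in by_label.values():
        # Keep at least one example per label so rare classes are not lost.
        k = max(1, round(len(group) * fraction))
        subset.extend(rng.sample(group, k))
    rng.shuffle(subset)
    return subset
```

For example, taking a 10% sample of 1,000 records of which 100 are labelled "fraud" yields 100 records, 10 of them fraud, so the class balance the model learns from is preserved.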

2- Architecting Agentic AI

Greater autonomy demands greater accountability. The more decisions we delegate to AI agents, the more important it becomes to establish governance frameworks that ensure accountability, safety, and transparency. Unlike standard AI models, which have explicit inputs and outputs, agents can act over extended periods, initiate interactions, and even learn on the fly.

That flexibility makes their behaviour harder to assess and predict. As a result, forward-thinking companies now prioritise human-in-the-loop controls, explainability methods, simulation-based testing, and agent monitoring before deploying agents at scale.
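A human-in-the-loop control can be as simple as a gate placed in front of an agent's actions. The sketch below assumes the agent reports a confidence score with each proposed action; the `ApprovalGate` class, the 0.9 threshold, and the action names are illustrative assumptions rather than a standard API.

```python
from dataclasses import dataclass, field


@dataclass
class ApprovalGate:
    """Human-in-the-loop control for an AI agent (illustrative sketch).

    Actions run automatically only when the agent is confident AND the action
    is not on the high-risk list; everything else is queued for a human.
    """
    confidence_threshold: float = 0.9
    high_risk_actions: frozenset = frozenset({"refund", "delete_account"})
    review_queue: list = field(default_factory=list)
    audit_log: list = field(default_factory=list)

    def submit(self, action: str, confidence: float) -> str:
        needs_human = (
            confidence < self.confidence_threshold
            or action in self.high_risk_actions
        )
        decision = "escalated" if needs_human else "executed"
        if needs_human:
            self.review_queue.append(action)
        # Every decision is logged so agent behaviour can be monitored later.
        self.audit_log.append((action, confidence, decision))
        return decision
```

A confident, low-risk action such as `gate.submit("send_email", 0.95)` returns `"executed"`, while a high-risk `"refund"` is escalated regardless of confidence.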

Trust is central to this progress. However sophisticated the technology, adoption will stall if employees and customers do not understand or trust how AI agents work. Building trust starts with clear communication, ethical principles, and accountability structures. It also means regularly evaluating agents' performance on edge cases and training them on diverse, unbiased data.

Agentic AI is a significant technical advance, but it is as much a human and organisational challenge as a technical one. How well companies meet that challenge will determine who succeeds in the AI-driven economy.

3- Infrastructure Overhaul

Scaling AI effectively requires more than smart algorithms; it demands a thorough redesign of the underlying infrastructure. Traditional IT infrastructure was never built for the sheer volume and complexity of AI workloads, which can involve enormous data streams and computationally intensive operations.

To meet these demands, businesses are investing heavily in AI-ready infrastructure: high-bandwidth networking, faster storage, and specialised hardware such as GPUs and TPUs. The goal is not just speed but scalable, adaptable systems that support AI models smoothly from training through real-time inference.

At the same time, the edge-versus-cloud debate is becoming more nuanced. The cloud remains a powerful tool for processing massive datasets and training models, but many AI applications must run at the edge, close to where data is generated. Consider smart retail environments, industrial IoT sensors, or driverless cars, where milliseconds count. This shift is pushing businesses toward hybrid environments that combine the best of both worlds: models run locally for speed and privacy, while syncing with cloud systems for heavy lifting and updates.

Companies that invest in adaptable architectures and strike the right balance between cloud and edge computing position themselves to scale AI more efficiently and reliably.

4- Talent and Leadership Gaps

Despite all the hype around AI, just 1% of organisations are said to have reached what experts call “AI maturity”: integrating AI into core business operations in a way that delivers value continuously and grows sustainably. For the vast majority, the biggest obstacle is talent rather than technology.

It’s difficult to find qualified data scientists, AI engineers, and machine learning professionals, and even when businesses do, it can be difficult to keep them and match their work with corporate objectives. The lack of skilled AI professionals limits adoption and raises the possibility of unsuccessful pilots and lost money.

Talent alone is not enough, however. Equally crucial is AI-ready leadership: managers and executives who genuinely understand AI's potential, limitations, and strategic possibilities.

These leaders treat AI not as a mere tech project but as a cross-functional transformation that calls for a clear vision, governance, and change management. They sponsor AI projects, break down organisational silos, and cultivate a culture that values innovation while grounding it in measurable business results. Without executives who can connect data teams and business units, even the best AI technology can fail.

Organizations must invest not only in hiring top-tier AI professionals but also in upskilling existing teams and cultivating leaders with AI fluency.

5- Cost, Efficiency, and ROI

One of the best ways to control costs while scaling AI is to deploy intelligently across edge and cloud environments. The cloud's abundant compute is ideal for large-scale data processing and intensive model training, but running everything there can quickly drive up costs and add latency.

Edge computing, by contrast, processes data in real time close to its source, which reduces bandwidth requirements and speeds up response times for critical applications such as autonomous systems and IoT sensors. By combining the two, businesses can tailor each deployment to the needs of its use case and optimise both cost and performance.
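To make the trade-off concrete, the sketch below estimates monthly inference cost for a cloud-only deployment versus a hybrid one. Every price in it is a hypothetical placeholder, not a quote from any provider, and the model deliberately ignores storage, staffing, and hardware refresh cycles; the function name `monthly_inference_cost` is likewise an assumption.

```python
def monthly_inference_cost(requests, edge_share, cloud_cost_per_1k,
                           edge_cost_per_1k, mb_per_request,
                           egress_cost_per_gb, edge_fixed_cost=0.0):
    """Rough monthly cost of serving `requests` predictions.

    edge_share: fraction of requests handled on edge devices (0 = cloud only).
    Cloud-bound requests also pay to move their input data over the network.
    edge_fixed_cost: amortised monthly cost of edge hardware.
    """
    if not 0.0 <= edge_share <= 1.0:
        raise ValueError("edge_share must be between 0 and 1")
    cloud_requests = requests * (1.0 - edge_share)
    edge_requests = requests * edge_share
    compute = ((cloud_requests / 1000) * cloud_cost_per_1k
               + (edge_requests / 1000) * edge_cost_per_1k)
    transfer_gb = cloud_requests * mb_per_request / 1024
    return compute + transfer_gb * egress_cost_per_gb + edge_fixed_cost


# Placeholder prices for illustration only; substitute your provider's rates.
PRICES = dict(cloud_cost_per_1k=0.50, edge_cost_per_1k=0.05,
              mb_per_request=0.5, egress_cost_per_gb=0.09)

cloud_only = monthly_inference_cost(10_000_000, 0.0, **PRICES)
hybrid = monthly_inference_cost(10_000_000, 0.8, **PRICES, edge_fixed_cost=1500)
```

With these placeholder figures, the cloud-only setup comes to about 5,439 per month and the 80% edge split to about 2,988, despite the 1,500 fixed edge cost. With different prices the hybrid option can just as easily come out more expensive, which is why the calculation is worth rerunning with real figures for each use case.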

Ultimately, managing cost and ROI requires careful planning and swift execution. Businesses should continually identify where AI can have the greatest impact, then build adaptable architectures that let them scale incrementally rather than all at once.

By utilising hybrid cloud-edge models and prioritising efficiency over size, businesses can achieve sustainable growth while maintaining financial stability. Doing more with less is not only a goal, but a requirement in the cutthroat field of artificial intelligence.

What Are the AI Challenges for Enterprise Deployment?

1- Complex Data Management

Managing massive amounts of data is one of the main challenges to enterprise AI adoption. Businesses collect vast amounts of data from many sources, including sensors, social media, transactions, and customer interactions. Because this data is often scattered across multiple systems, unstructured, and heterogeneous, it is harder to clean, store, and prepare for machine learning applications.

The complexity of data storage systems is another problem. When some data lives on-premises and some in cloud or hybrid environments, ensuring accessibility, security, and consistency across the organisation becomes difficult. An unmanaged data environment also creates silos that make it hard to use all the available data for insights and model training.

To overcome these obstacles, businesses can:

- Put in place unified data systems that combine information from several sources into one easily accessible area.

- Apply robust preprocessing and data cleaning techniques so that your data is ready for machine learning applications.

- To guarantee uniformity, security, and accessibility across all data sources, create robust data governance structures.

- To store enormous volumes of unstructured data in a scalable and easily accessible manner, use cloud-based data lakes.
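As a minimal illustration of the first two points, the sketch below merges customer records from two systems into one table, using a normalised e-mail address as the join key. The source names (`crm`, `billing`), the field names, and the first-non-empty-value-wins rule are assumptions for illustration; production pipelines would typically rely on a dedicated data integration tool.

```python
def normalise_email(value):
    """Canonical join key: trimmed, lower-case e-mail, or None if missing."""
    if not value or not value.strip():
        return None
    return value.strip().lower()


def unify_sources(crm_rows, billing_rows):
    """Merge customer records from two systems into one table keyed by e-mail."""
    unified = {}
    for source, rows in (("crm", crm_rows), ("billing", billing_rows)):
        for row in rows:
            key = normalise_email(row.get("email"))
            if key is None:
                continue  # unlinkable record: drop it here (or route it for review)
            merged = unified.setdefault(key, {"email": key, "sources": []})
            for field_name, value in row.items():
                if field_name == "email" or value in (None, ""):
                    continue
                # First non-empty value wins; later sources only fill gaps.
                merged.setdefault(field_name, value)
            if source not in merged["sources"]:
                merged["sources"].append(source)
    return list(unified.values())
```

Records such as `" Ana@Example.com "` in the CRM and `"ana@example.com"` in billing collapse into one row that carries the name from one system and the plan from the other, with both sources listed for lineage.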

2- Organisational Silos

One of the biggest challenges for companies utilizing AI is the existence of organizational silos. Teams in businesses are usually divided by department, function, or even location, which can result in communication barriers, inefficient workflows, and misaligned goals. For example, different agendas, work cultures, and platforms may make it difficult for data scientists, DevOps teams, and business divisions to collaborate effectively on machine learning initiatives.

These silos can fragment AI efforts across the organisation. A data science team may build a powerful machine learning model, yet fail to get it into production if it is not coordinated with operations or IT. When teams are out of sync, it is also harder to adopt a single AI approach that covers model building, deployment, monitoring, and maintenance across the organisation.

To break down organisational silos, large enterprises can:

- Establish specialized AI & Data teams that include data scientists, engineers, and business executives to promote cross-functional cooperation.

- To facilitate open communication and information exchange across teams, use collaboration tools like Slack, JIRA, or Confluence.

- Establish a centralized structure for AI governance to bring all teams together around shared objectives and guarantee that AI projects adhere to standard procedures.

- Adopt agile approaches that promote iterative work and continuous team input to keep projects in line with corporate goals.

3- Compliance to Regulations

Organizations, especially in regulated industries such as healthcare, banking, and telecommunications, must maintain regulatory compliance while using machine learning models. Many firms are subject to strict laws on data security, privacy, and transparency, such as HIPAA in the US and the GDPR in Europe. By restricting how data is collected, stored, processed, and used, these rules can limit how flexibly companies apply AI and machine learning.

It might also be difficult to guarantee ongoing compliance when models change over time. Models drift, regulations change, and new data can necessitate fresh compliance evaluations. Businesses can find it difficult to guarantee that their machine learning algorithms stay compliant over time without an appropriate compliance structure in place.

Businesses can take the following actions to resolve regulatory compliance issues:

- Use compliance monitoring tools to track data usage and model behaviour, and to verify that applicable regulations are being followed.

- Use explainable AI (XAI) techniques to make sure that models are comprehensible and that stakeholders and regulators can understand the reasoning behind choices.

- To make sure models continue to adhere to changing requirements, audit and update them on a regular basis.

- Work together with the legal and compliance departments to make sure AI initiatives meet the most recent legal specifications.
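Model drift can be checked automatically as part of these regular audits. One widely used statistic is the Population Stability Index (PSI), which compares a feature's distribution in reference (training) data with its distribution in live data. The sketch below is a plain-Python version; the function name, bin count, and epsilon are assumptions, and the thresholds in the docstring are a common rule of thumb rather than a regulatory requirement.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a reference sample (e.g. training data) and live data.

    Bins are equal-width over the reference range; a small epsilon avoids
    log(0) when a bin is empty. Common rule of thumb: < 0.1 stable,
    0.1-0.25 moderate shift, > 0.25 significant drift.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def shares(values):
        counts = [0] * bins
        for v in values:
            # Clamp so live values outside the reference range land in edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), 1e-6) for c in counts]

    exp_shares, act_shares = shares(expected), shares(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(exp_shares, act_shares))
```

Comparing a feature with itself gives a PSI of 0, while a live distribution shifted well outside the training range scores far above 0.25, which would be a signal to trigger a fresh compliance and performance review.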

4- Cost Optimization

Cost optimization is a significant issue for enterprises, particularly as they expand the use and maintenance of machine learning (ML) models. The costs of data processing, model training, and deployment can be difficult and expensive to manage.

Allocating resources effectively while balancing cost and performance is critical to the long-term viability of machine learning initiatives. For large organisations, whose operations and resources can be enormous, cost-efficient practices are crucial to avoid overspending and to maximise the return on investment (ROI) of AI projects. Ways to address cost optimization include:

- Adopt a Hybrid AI Approach: Rather than relying solely on on-premise infrastructure or solely on cloud services, businesses can combine the two.

- Optimise Data Pipeline Infrastructure: To cut expenses related to maintaining data processing infrastructure, businesses can implement serverless data processing services like AWS Lambda or Google Cloud Functions.

- Review and Optimise Resource Usage Frequently: To make sure that resources are being used efficiently, businesses must audit their AI workflows on a frequent basis.
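The serverless option can be sketched as an AWS Lambda function in Python. `lambda_handler(event, context)` follows Lambda's standard Python handler signature; the event shape (a list of records with `sku` and `qty` fields) and the cleaning rules are hypothetical. Because Lambda bills per request and for execution time, a step like this incurs no compute charges while idle (unless provisioned concurrency is configured).

```python
import json


def lambda_handler(event, context):
    """Serverless cleaning step (sketch): validate, normalise, summarise.

    Assumes a hypothetical event of the form
    {"records": [{"sku": ..., "qty": ...}, ...]}; real triggers such as
    S3, SQS, or API Gateway deliver their own event shapes.
    """
    cleaned, rejected = [], 0
    for record in event.get("records", []):
        try:
            sku = str(record["sku"]).strip().upper()
            qty = int(record["qty"])
        except (KeyError, TypeError, ValueError):
            rejected += 1  # malformed rows are counted, not silently dropped
            continue
        if not sku or qty < 0:
            rejected += 1
            continue
        cleaned.append({"sku": sku, "qty": qty})

    return {
        "statusCode": 200,
        "body": json.dumps({"cleaned": cleaned, "rejected": rejected}),
    }
```

The function can be exercised locally before deployment by calling it directly, for example `lambda_handler({"records": [{"sku": " ab1 ", "qty": "3"}]}, None)`.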

How to Choose the Right AI Solution for Your Business

Below are key considerations to help guide you in choosing the right AI stack for your business.

1- Comprehend Your Business Needs and Growth Potential

The first step in choosing the best AI stack is to assess your business needs and the scale at which you expect to operate. Smaller businesses with fewer resources will want a solution that is affordable, user-friendly, and scalable.

Look for products flexible enough that your team can adopt and integrate them with little upfront investment. An AI stack offering cloud-based or lightweight options may suit small firms just getting started with machine learning, since it can grow with the company without requiring heavy infrastructure.

2- Evaluate Integration with Existing Systems

How effectively the AI stack integrates with your current infrastructure is another important consideration. Many companies already have processes in place, so switching to a new platform that doesn’t work well with them might cause problems or inefficiencies.

For businesses with legacy systems, it is crucial to select a stack that supports simple integration through APIs and pre-built connectors. This avoids complex or expensive rebuilds and ensures a smooth data flow between your AI system and other internal tools.

3- Prioritise Flexibility and Scalability

The AI stack should be able to grow with your company. This adaptability keeps your machine learning processes running effectively even as data volumes, model complexity, and processing demands rise.

Companies should seek out AI technologies that enable them to extend their infrastructure and capabilities without undergoing significant overhauls, particularly those with plans for future expansion.

Scalable systems that adjust resources dynamically to demand are especially important for small enterprises with limited initial resources, because they let you optimise costs as you grow.

4- Pay Attention to Compliance, Governance, and Security

Security, governance, and regulatory compliance should be given top priority when choosing an AI stack, particularly for companies operating in highly regulated industries like government, healthcare, or finance.

To prevent legal problems, it is essential to protect sensitive data and make sure that data privacy rules are followed. Choose an AI platform with strong security features like audit trails, access control, and data encryption. These tools will assist in making sure that sensitive data is only accessible by authorized persons and that all actions are recorded for auditing purposes.
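Access control and audit trails can be illustrated with a minimal role-based sketch in which every access attempt, allowed or denied, is recorded. The roles, permission strings, and in-memory `audit_trail` list are hypothetical; a real platform would persist the log to tamper-resistant storage, and data encryption is outside the scope of this example.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; real platforms load this from policy config.
ROLE_PERMISSIONS = {
    "data_scientist": {"read:features", "train:model"},
    "analyst": {"read:reports"},
    "admin": {"read:features", "train:model", "read:reports", "read:pii"},
}

audit_trail = []


def access(user, role, permission, resource):
    """Check a permission and record the attempt, whether allowed or denied."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    audit_trail.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "permission": permission,
        "resource": resource,
        "allowed": allowed,
    })
    return allowed
```

Unknown roles get no permissions by default, so a misconfigured account fails closed rather than open, and the denied attempt still appears in the audit trail.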

Streamlining AI with papAI: Key Benefits and Features

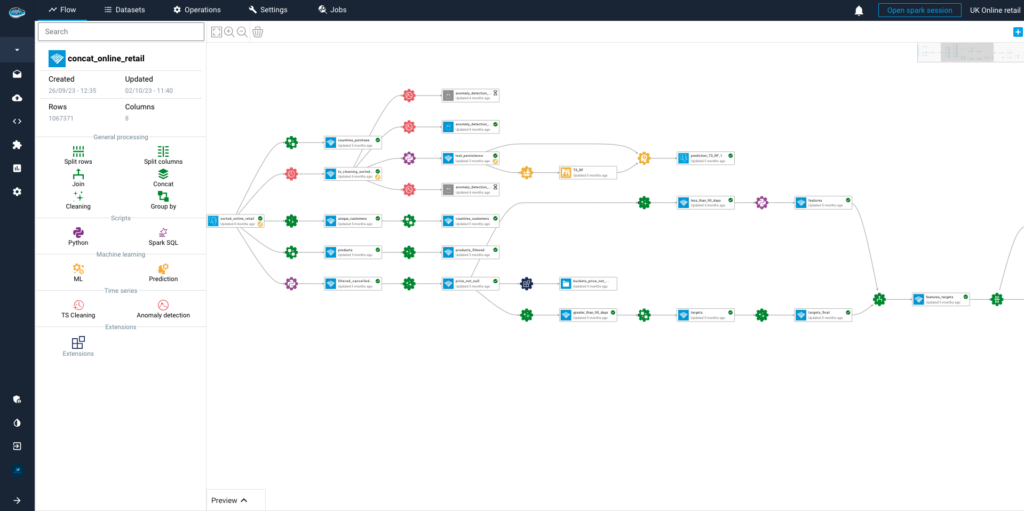

papAI is a scalable, modular, and automated AI platform designed for end-to-end ML lifecycle management, seamlessly integrating with existing infrastructures.

Businesses may use papAI solutions to industrialize and execute AI and data science projects. It is collaborative by nature and was created to support cooperation on a single platform. The platform’s interface makes it possible for teams to collaborate on challenging tasks.

Its features include several machine learning approaches, multiple model deployment options, data exploration and visualization tools, and data cleaning and pre-processing capabilities.

Here’s an in-depth look at the key features and advantages of this innovative solution:

Simplified Model Implementation

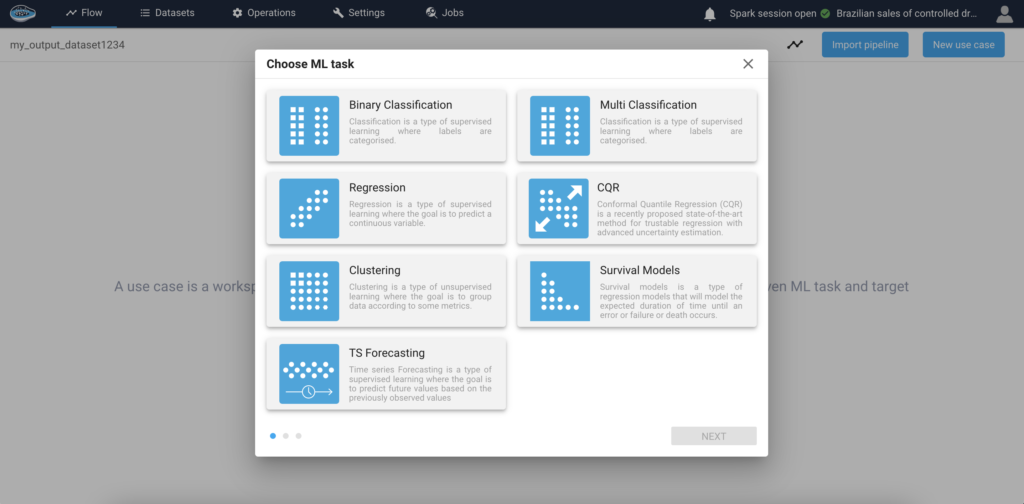

papAI greatly simplifies machine learning model deployment through its Model Hub, the platform's main source of pre-built, deployment-ready models (binary classification, regression, clustering, time-series forecasting, and more). As a result, companies no longer need to build models from scratch. According to our most recent survey, papAI users frequently save 90% of the time needed to implement AI concepts.

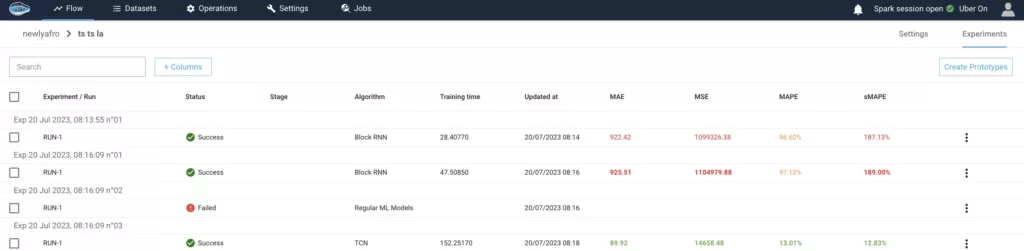

Efficient Model Monitoring & Tracking

papAI provides a range of monitoring tools that let organisations track the most important model performance metrics in real time. Users can follow accuracy, precision, and other relevant indicators to monitor a model's performance and spot potential issues or deviations. This continuous monitoring helps maintain high-quality outputs and makes it possible to detect any decline in model performance proactively. We have shown our clients accuracy of up to 98%.
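papAI's monitoring internals are not described here, so the following is only a generic sketch of the idea: tracking accuracy and precision over a sliding window of recent labelled predictions and flagging degradation when accuracy falls below a threshold. The class name, window size, and threshold are hypothetical, and the sketch assumes ground-truth labels eventually arrive for production predictions.

```python
from collections import deque


class RollingMetrics:
    """Accuracy and precision over the most recent `window` labelled predictions."""

    def __init__(self, window=500, min_accuracy=0.9):
        self.pairs = deque(maxlen=window)  # (predicted, actual) booleans
        self.min_accuracy = min_accuracy

    def record(self, predicted, actual):
        self.pairs.append((bool(predicted), bool(actual)))

    def accuracy(self):
        if not self.pairs:
            return None
        return sum(p == a for p, a in self.pairs) / len(self.pairs)

    def precision(self):
        # Share of positive predictions that were actually positive.
        outcomes = [a for p, a in self.pairs if p]
        if not outcomes:
            return None
        return sum(outcomes) / len(outcomes)

    def degraded(self):
        acc = self.accuracy()
        return acc is not None and acc < self.min_accuracy
```

Because the window has a fixed length, old results drop out automatically, so a recent decline shows up quickly instead of being diluted by months of earlier, healthier predictions.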

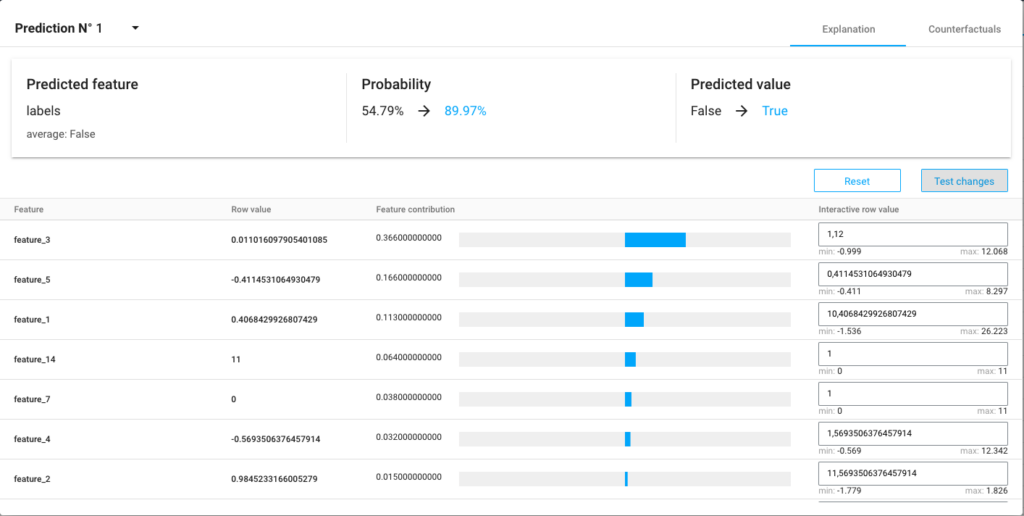

Enhanced Interpretability and Explainability of the Model

Deep learning and other advanced machine learning models have long been perceived as “black boxes,” making it hard to understand how they produce predictions. papAI addresses this directly with state-of-the-art explainability techniques: it highlights the key features that influence each prediction and provides insight into the models' inner workings, so stakeholders can see how these algorithms reach their decisions.
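The article does not specify which explainability techniques papAI uses. As a generic illustration of model-agnostic explainability, the sketch below implements permutation importance, a widely used method that measures how much a model's accuracy drops when one feature's values are shuffled. The function and parameter names are assumptions; libraries such as scikit-learn ship a production-grade version.

```python
import random


def permutation_importance(predict, rows, labels, n_repeats=5, seed=0):
    """Mean drop in accuracy when each feature column is randomly shuffled.

    predict: callable taking one row (a list of feature values).
    A larger drop means the model relies more heavily on that feature.
    """
    rng = random.Random(seed)

    def accuracy(data):
        return sum(predict(r) == y for r, y in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # breaks the link between feature j and the label
            shuffled = [list(r[:j]) + [v] + list(r[j + 1:])
                        for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(shuffled))
        importances.append(sum(drops) / n_repeats)
    return importances
```

A feature the model ignores always scores exactly zero, while a feature the model depends on shows a large accuracy drop, which gives stakeholders a simple ranking of what drives predictions.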

What Makes papAI Unique?

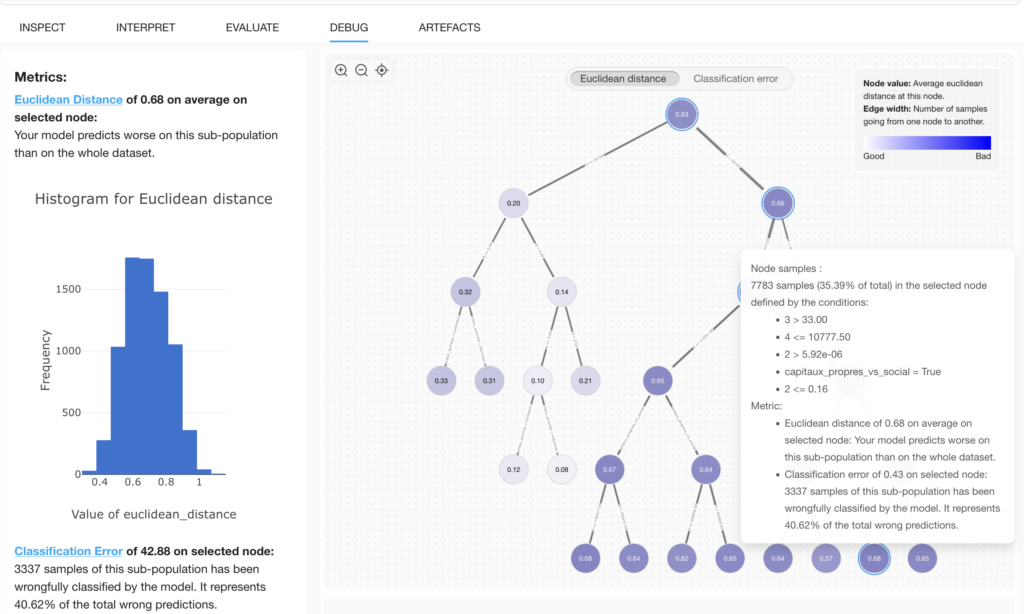

Error Analysis Tree for Fairness and Transparency

Going beyond conventional bias detection, papAI's Error Analysis Tree for Fairness and Transparency is a sophisticated tool that automatically detects and explains model errors. It splits the data into subgroups and pinpoints regions with high error rates, helping businesses uncover hidden problems in a model's predictions.

This analysis tool ensures that fairness and transparency are prioritized by not only assessing model performance across various groups but also identifying specific instances where the model underperforms, making it easier to address issues systematically.

- Beyond Equity Measures: Traditional bias analysis usually concentrates on equity measures, which assess whether a model treats particular groups unfairly (for example, based on gender, ethnicity, or socioeconomic status). The Error Analysis Tree is not limited to these issues: it offers a broader perspective, examining performance across a range of data attributes rather than only predefined protected groups.

- Root Cause Analysis Without Predefined Groups: Unlike bias analysis tools that require users to define protected groups (such as specific demographic or identity groups) beforehand, the Error Analysis Tree autonomously explores the data to discover and highlight the real sources of errors. It offers a deeper, data-driven exploration of where and why the model fails, providing more accurate insights into underlying problems.

- Performance Improvement Focus: While bias analysis is often focused on measuring discrepancies between groups, error analysis is more performance-oriented. The goal of error analysis is not merely to detect whether disparities exist between groups, but to identify specific areas where the model is weak or inaccurate. This facilitates targeted improvements to the model, making it more effective and fair across all subgroups.
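The general idea behind an error analysis tree, fitting a shallow decision tree to a model's error flags so that each leaf describes a cohort and its error rate, can be sketched in plain Python. This is not papAI's implementation: it handles categorical features only, uses Gini impurity on the error flags as the split criterion, and its names and default parameters (`max_depth`, `min_samples`, `min_gain`) are assumptions.

```python
def gini(flags):
    """Gini impurity of a list of 0/1 error flags."""
    if not flags:
        return 0.0
    p = sum(flags) / len(flags)
    return 2 * p * (1 - p)


def error_analysis_tree(rows, errors, max_depth=2, min_samples=20,
                        min_gain=1e-9, path=()):
    """Split the data on categorical features to isolate high-error cohorts.

    rows:   list of dicts of categorical feature values
    errors: 1 where the model's prediction was wrong, else 0
    Returns leaves as (conditions, sample_count, error_rate).
    """
    n = len(rows)
    leaf = [(path, n, sum(errors) / n if n else 0.0)]
    if max_depth == 0 or n < 2 * max(min_samples, 1):
        return leaf

    best = None
    for feature in rows[0]:
        for value in sorted({r[feature] for r in rows}, key=str):
            left = [i for i in range(n) if rows[i][feature] == value]
            right = [i for i in range(n) if rows[i][feature] != value]
            if len(left) < min_samples or len(right) < min_samples:
                continue
            # Weighted impurity of the error flags after the split.
            score = (len(left) * gini([errors[i] for i in left])
                     + len(right) * gini([errors[i] for i in right])) / n
            if best is None or score < best[0]:
                best = (score, feature, value, left, right)

    # Stop when no split is allowed or the best one barely reduces impurity.
    if best is None or gini(errors) - best[0] < min_gain:
        return leaf

    _, feature, value, left, right = best
    leaves = []
    for idx, cond in ((left, f"{feature} == {value!r}"),
                      (right, f"{feature} != {value!r}")):
        leaves += error_analysis_tree(
            [rows[i] for i in idx], [errors[i] for i in idx],
            max_depth - 1, min_samples, min_gain, path + (cond,))
    return leaves
```

No protected groups are defined up front: on a toy dataset where most errors fall on mobile users in the north region, the leaf with the highest error rate is the one whose conditions are `region == 'north'` and `device != 'desktop'`, which is exactly the kind of data-driven root cause described above.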

Leverage papAI's AI Capabilities for Superior Model Performance

Learn how papAI solution makes it easier to deploy and manage machine learning models so you can easily realize their full potential.

During the demo, our AI expert will walk you through papAI's sophisticated features, simplified workflow, and integration so that you fully grasp its potential. Use papAI to transform your company's AI strategy.

Activate your free session today and see how our AI-driven platform can elevate your AI transformation journey.

Scaling AI is not just a technical challenge, because it involves aligning teams, processes, and governance across an organization. Successful AI scaling requires not only robust technology but also leadership buy-in, cross-department collaboration, and the cultural readiness to adopt new workflows and trust AI-driven decisions.

AI is built on data, but quality is more important than quantity. Particularly when privacy concerns and data access issues increase, intelligent data curation combined with synthetic and distilled datasets can increase model accuracy and save costs.

AI-ready leaders understand both the technology and its strategic implications. They set clear objectives, encourage collaboration between data teams and business divisions, and build a culture that values innovation, governance, and data-driven decision-making.

Businesses can control AI costs by using intelligent deployment techniques across cloud and edge environments, optimising resource utilisation, and prioritising AI projects with measurable business impact. Progressive scaling and ongoing ROI monitoring increase efficiency and help avoid budget overruns.
