Why is Deployment Speed the New 2026 AI Moat?

The traditional belief that massive data centers and sprawling infrastructure define a company’s AI dominance is rapidly fading. In 2026, we’ve reached a tipping point where “bigger” no longer translates to “better.” As AI tools become more accessible, the real competitive edge has shifted from the sheer volume of resources you own to how quickly you can turn a creative spark into a functional, deployed solution.

41% of senior executives admit that delayed AI adoption is causing them to fall behind their competitors, while 47% feel their organization is progressing “too slowly” despite active investments. (Source: McKinsey / Zapier 2026 Survey Data).

This article explores why the era of industrial-scale AI is giving way to a new age of agility, where the ability to experiment, iterate, and launch at high velocity is the only sustainable moat left in a hyper-accelerated market.

From Data Accumulation to Rapid Orchestration

Competitive advantage in the current artificial intelligence environment has fundamentally shifted from resource accumulation to operational velocity. In the past, the “scaling laws” dictated that larger datasets and more processing capacity would always lead to better performance. Now, as frontier models become more commoditized, the strategic turning point has moved toward deployment speed.

Organizations that adopt a “speed-first” design shorten the lag between theoretical innovation and market utility. This flexibility lets businesses avoid the diminishing returns of huge, monolithic infrastructure and instead adopt a lean, iterative strategy that puts real-time adaptability ahead of static, wide-scale deployment.

Implementing this strategic shift requires reorganizing traditional management structures into decentralized, AI-powered units. The preference for speed over scale reflects a move toward “Dynamic Capability Theory,” which holds that a company’s success depends on its capacity to integrate, develop, and reconfigure internal and external competencies to handle quickly changing environments.

Innovation in this context is a high-velocity, ongoing cycle rather than a single event. As the barrier to entry continues to fall, the only durable competitive advantage is the systemic capacity to innovate faster than the market can commoditize, which makes execution speed the ultimate barrier to entry.

Why does the Commoditization of AI Models Accelerate the Necessity for Rapid Deployment?

The rapid commoditization of AI models has drastically changed the competitive environment, making execution velocity rather than model ownership the main source of value. The ability to build or acquire high-parameter foundation models used to be a major barrier to entry, but the growth of open-source architectures and the standardization of high-performance APIs have eroded that advantage.

When advanced intelligence becomes a commonplace utility, like electricity or cloud computing, the “intelligence” itself stops being a differentiator. As a result, businesses can no longer depend on a particular model’s performance to keep market leadership, because rivals can obtain similar capabilities almost instantly.

88% of CEOs now rank “deployment velocity” as a more important KPI than “model accuracy,” acknowledging that a 90% accurate model deployed today is more valuable than a 95% accurate model deployed next quarter. (Source: McKinsey Global Institute / CEO Survey 2026)

As these models approach functional parity, the strategic focus inevitably shifts to deployment speed as the new success factor. Where the underlying technology is a commodity, the “moat” is the organizational capacity to bring these technologies into production faster than the market can react.

This need is driven by the fact that, in 2026, the shelf life of a technical advantage is measured in weeks rather than years. Businesses with the architectural flexibility to implement, test, and refine commoditized models in real time can seize fleeting market openings and achieve a “first-mover” advantage that slower-moving, infrastructure-heavy competitors cannot match.

What are the Best Practices for Building an AI-ready Architecture for Rapid Deployment?

1- Orchestrating Agentic Swarms

Organizational theory and computational deployment have fundamentally changed as decentralized “agentic swarms” replace monolithic artificial intelligence frameworks. In the past, corporate AI strategy was defined by the creation of unique, all-inclusive models intended to handle a wide range of internal needs. However, these enormous systems’ intrinsic inertia frequently led to a large “deployment lag,” in which the technology became outdated before it reached operational maturity.

In 2026, the consensus among academics has shifted in favor of modularity: independent, task-specific agents coordinated by a shared orchestration layer. With a swarm-based approach, businesses can “hot-swap” individual components as better models emerge without disrupting the larger ecosystem, which reduces the risk of being tethered to a particular infrastructure.
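To make the “hot-swap” idea concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the `AgentRegistry` class, the task names, and the stand-in agent functions are hypothetical, and real agents would call actual models rather than manipulate strings.

```python
from typing import Callable, Dict

# An "agent" here is simply a function from a text request to a text response.
Agent = Callable[[str], str]


class AgentRegistry:
    """Hypothetical registry mapping task names to interchangeable agents."""

    def __init__(self) -> None:
        self._agents: Dict[str, Agent] = {}

    def register(self, task: str, agent: Agent) -> None:
        # Registering under an existing task name replaces ("hot-swaps") the agent.
        self._agents[task] = agent

    def run(self, task: str, request: str) -> str:
        if task not in self._agents:
            raise KeyError(f"no agent registered for task {task!r}")
        return self._agents[task](request)


def summarizer_v1(text: str) -> str:
    # Stand-in for an older model: keeps the first five words.
    return " ".join(text.split()[:5])


def summarizer_v2(text: str) -> str:
    # Stand-in for a newer model: keeps the first three words.
    return " ".join(text.split()[:3])


def classifier(text: str) -> str:
    # Stand-in for a triage agent.
    return "urgent" if "outage" in text.lower() else "routine"


registry = AgentRegistry()
registry.register("summarize", summarizer_v1)
registry.register("classify", classifier)

ticket = "Outage reported in the payments service since this morning"
before = registry.run("summarize", ticket)

# Swap only the summarizer; the classifier and all callers stay untouched.
registry.register("summarize", summarizer_v2)
after = registry.run("summarize", ticket)
label = registry.run("classify", ticket)
```

The point of the design is that callers depend on a task name, not on a specific model, so replacing one agent does not ripple through the rest of the system.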

2- Automated Governance

Technological integration has typically been hampered by traditional corporate governance and security procedures, which can require multi-quarter cycles for thorough risk assessment. “Fast-Track” Governance, a system built on pre-screened AI sandboxes and automated guardrails, is replacing these antiquated frameworks in the hyper-accelerated world of 2026. This paradigm change recognizes that the quick development of generative AI cannot coexist with static, manual evaluations.

By integrating compliance requirements directly into the development environment, organizations can move from prototype to production-ready pilot in a matter of days. This represents a shift to “Compliance-as-Code,” where safety parameters are programmatically enforced throughout the AI agent’s lifecycle, rather than a lowering of security standards.
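As a rough illustration of “Compliance-as-Code,” the sketch below expresses a policy as a list of machine-checkable rules and uses it as a release gate. The rule IDs, the `AgentSpec` fields, and the 100 EUR spend cap are all invented for the example; an actual policy would be defined by legal and security teams.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class AgentSpec:
    """Hypothetical description of an agent awaiting promotion to production."""
    name: str
    owner: str
    handles_personal_data: bool
    has_audit_logging: bool
    max_autonomous_spend_eur: float


@dataclass
class Rule:
    rule_id: str
    description: str
    check: Callable[[AgentSpec], bool]  # returns True when the spec complies


# Illustrative policy only (assumed rules, not a real regulatory checklist).
POLICY: List[Rule] = [
    Rule("PII-1", "agents handling personal data must write audit logs",
         lambda s: not s.handles_personal_data or s.has_audit_logging),
    Rule("FIN-1", "autonomous spend is capped at 100 EUR",
         lambda s: s.max_autonomous_spend_eur <= 100.0),
    Rule("OWN-1", "every agent must name an accountable owner",
         lambda s: bool(s.owner.strip())),
]


def violations(spec: AgentSpec, policy: List[Rule] = POLICY) -> List[str]:
    """Return the IDs of every rule the spec breaks (empty list means compliant)."""
    return [rule.rule_id for rule in policy if not rule.check(spec)]


def can_promote(spec: AgentSpec) -> bool:
    # A deployment pipeline would call this gate before each release.
    return not violations(spec)


safe = AgentSpec("invoice-triage", "finance-ops", True, True, 50.0)
risky = AgentSpec("auto-buyer", "", True, False, 500.0)
```

Because the rules are data, adding or tightening a rule changes the gate for every agent at once, without a separate manual review for each release.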

3- Prioritizing Small Language Models (SLMs) for Edge Deployment

The explicit preference for Small Language Models (SLMs) over large, high-latency foundation models reflects a deployment philosophy that emphasizes “computational parsimony.” Although Large Language Models hold a vast amount of general knowledge, their high inference costs and latency often make real-time application at the edge difficult. By moving toward efficient, task-specific SLMs, organizations can use a fraction of the hardware resources while achieving higher performance in particular domains.

Because they are small and fast, these models can be deployed closer to the end-user, directly on local servers or mobile devices, which avoids the network delays associated with cloud-based processing. For 2026 applications, where immediate reaction times are the benchmark for utility, this latency reduction is essential.
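One way to picture this trade-off is a simple router that prefers a task-specific edge model whenever one fits the latency budget. The sketch below is only an illustration: the model names, the domains, and the latency figures are assumptions, not benchmarks.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class ModelOption:
    name: str
    location: str                # "edge" or "cloud"
    typical_latency_ms: int      # assumed figure, not a measurement
    domains: FrozenSet[str]      # domains the model is tuned for; empty means general-purpose


# Hypothetical catalog of deployable models.
CATALOG: List[ModelOption] = [
    ModelOption("slm-support-intents", "edge", 40, frozenset({"support"})),
    ModelOption("slm-invoice-fields", "edge", 60, frozenset({"invoices"})),
    ModelOption("general-llm", "cloud", 900, frozenset()),
]


def pick_model(domain: str, latency_budget_ms: int) -> Optional[ModelOption]:
    """Pick the fastest model that covers the domain within the latency budget."""
    candidates = [
        m for m in CATALOG
        if (not m.domains or domain in m.domains)
        and m.typical_latency_ms <= latency_budget_ms
    ]
    if not candidates:
        return None  # nothing meets the budget; the caller must relax it or fail
    return min(candidates, key=lambda m: m.typical_latency_ms)
```

With this catalog, a support request with a 100 ms budget goes to the edge model, while a domain with no specialized SLM falls back to the general cloud model only if the budget allows it.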

4- Strategy-as-Code

A new management concept called “Strategy-as-Code” aims to close the gap between technical implementation and high-level executive intent. In traditional organizational structures, the manual, labor-intensive process of translating corporate objectives into functional requirements is prone to “semantic drift,” a phenomenon where the initial strategic vision gets diluted as it passes through successive levels of middle management and engineering. By using AI to automate this translation, businesses can build their strategic pillars straight into the deployment pipeline.

The result is a reflexive system in which business goals are programmatically matched with technical outcomes. The combination of “automated requirement engineering” and “intent-based networking” helps ensure that each deployed agent contributes to a particular, measurable business objective.
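A small sketch can show what “programmatically matched” might mean in practice. Here, business objectives are declared as data and every planned agent must point at one of them. The objective keys, metrics, targets, and agent names are all hypothetical, and the AI-driven translation step itself is out of scope for this example.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Objective:
    key: str
    metric: str
    target: float


@dataclass(frozen=True)
class AgentDeployment:
    name: str
    objective_key: str  # the business objective this agent claims to serve


# Hypothetical strategic objectives, declared once and versioned like code.
OBJECTIVES: Dict[str, Objective] = {
    "reduce-churn": Objective("reduce-churn", "monthly_churn_rate", 0.02),
    "faster-support": Objective("faster-support", "median_first_reply_minutes", 5.0),
}


def unaligned(deployments: List[AgentDeployment],
              objectives: Dict[str, Objective] = OBJECTIVES) -> List[str]:
    """Names of agents that do not map to any declared objective."""
    return [d.name for d in deployments if d.objective_key not in objectives]


def coverage(deployments: List[AgentDeployment],
             objectives: Dict[str, Objective] = OBJECTIVES) -> Dict[str, List[str]]:
    """For each objective, the agents that claim to serve it."""
    result: Dict[str, List[str]] = {key: [] for key in objectives}
    for d in deployments:
        if d.objective_key in result:
            result[d.objective_key].append(d.name)
    return result


planned = [
    AgentDeployment("retention-offers", "reduce-churn"),
    AgentDeployment("reply-drafter", "faster-support"),
    AgentDeployment("meme-generator", "brand-fun"),
]
```

A pipeline could refuse to deploy anything returned by `unaligned(planned)`, and `coverage(planned)` makes it visible when an objective has no agent working toward it.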

Which stakeholders drive the acceleration of AI deployment within an organization?

1- The CEO

The CEO is the main judge of organizational risk and culture in the 2026 environment. Their responsibilities have changed from managing conventional, multi-year scaling to encouraging a culture of quick innovation. By framing AI integration as a basic survival mandate rather than just a technical endeavor, the CEO gives teams the institutional legitimacy they need to get past slow-moving bureaucracy. This “political cover” is crucial because it enables the organization to put deployment velocity ahead of the antiquated goal of complete perfection.

By modeling this behavior, the CEO turns the C-suite from a bottleneck into an accelerator. Their influence ensures that the company’s “risk appetite” is adjusted for the AI era, where the biggest risk is not a failed experiment but the inertia of moving too slowly while rivals gain market share through quicker iteration cycles.

2- The CDO/CAIO

The Chief Digital Officer (CDO), in some organizations the Chief AI Officer (CAIO), is the crucial connection between executive intent and technology implementation. In 2026, their performance is gauged by “Cycle Time to Value” rather than the size of their data lake. They promote speed by institutionalizing “Strategy-as-Code,” an approach that uses AI to automatically transform high-level business goals into deployable technical requirements. This reduces the “semantic drift” that frequently results from manual handoffs between engineering and leadership. By substituting “Agentic Swarms” for monolithic development cycles, the CDO also keeps the company technology-agnostic, so it can “hot-swap” specialized AI agents when better models become available.

The CDO is also the architect of the Automated Governance “Sandbox.” In 2026, 60% of CDOs have formal responsibility for governing autonomous agents. Rather than traditional, month-long security reviews, they implement programmatic guardrails that enforce compliance, bias-checking, and safety in real time.

3- The Data Team

The Data Team’s function has fundamentally changed, from building to orchestration and liquidity. In a speed-first approach, the main bottleneck is often the “data preparation” needed to feed the AI model rather than the model itself. In 2026, high-performing data teams concentrate on developing “AI-Ready Data Products”: curated, real-time streams of data that have already undergone governance and quality checks. By switching from manual data manipulation to automated data pipelines, they supply the “high-fidelity fuel” that enables autonomous agents to operate without human assistance. This change makes data a dynamic, high-velocity resource rather than a static asset.

This is often described as the shift to “Context Engineering.” Data scientists now spend more time improving the context and “embeddings” that give AI agents their business-specific insight than fine-tuning massive models. By mastering upstream monitoring, which checks that the data agents rely on is reliable and timely, the data team reduces the “hallucinations” and mistakes that usually impede deployment. Their influence comes from their capacity to democratize access to intelligence: by offering self-service data platforms, they enable non-technical departments to securely deploy their own specialized agents.
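The kind of upstream check described here can be sketched as a small readiness gate that tests freshness and completeness before agents are allowed to read a data product. The field names, the 15-minute freshness window, and the 95% completeness threshold below are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional, Sequence


@dataclass
class DataProductStatus:
    """Hypothetical snapshot of a data product at check time."""
    name: str
    last_updated: datetime
    rows: List[Dict[str, Optional[str]]]


def is_fresh(status: DataProductStatus, now: datetime, max_age: timedelta) -> bool:
    return now - status.last_updated <= max_age


def completeness(status: DataProductStatus, required_fields: Sequence[str]) -> float:
    """Share of rows in which every required field is present and non-empty."""
    if not status.rows:
        return 0.0
    complete = sum(
        1 for row in status.rows
        if all(row.get(field) not in (None, "") for field in required_fields)
    )
    return complete / len(status.rows)


def ready_for_agents(status: DataProductStatus,
                     now: datetime,
                     max_age: timedelta = timedelta(minutes=15),
                     required_fields: Sequence[str] = ("customer_id", "plan"),
                     min_completeness: float = 0.95) -> bool:
    # Both conditions must hold before autonomous agents consume the data.
    return (is_fresh(status, now, max_age)
            and completeness(status, required_fields) >= min_completeness)


now = datetime(2026, 1, 1, 12, 0, tzinfo=timezone.utc)
rows = [
    {"customer_id": "c1", "plan": "pro"},
    {"customer_id": "c2", "plan": "free"},
    {"customer_id": "c3", "plan": ""},      # incomplete row
    {"customer_id": "c4", "plan": "pro"},
]
fresh = DataProductStatus("customers", now - timedelta(minutes=5), rows)
stale = DataProductStatus("customers", now - timedelta(hours=1), rows)
```

In this sample, the fresh product still fails the default gate because one row in four is incomplete, which is the situation the gate exists to catch before an agent acts on the data.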

Streamlining AI with papAI: Key Benefits and Features

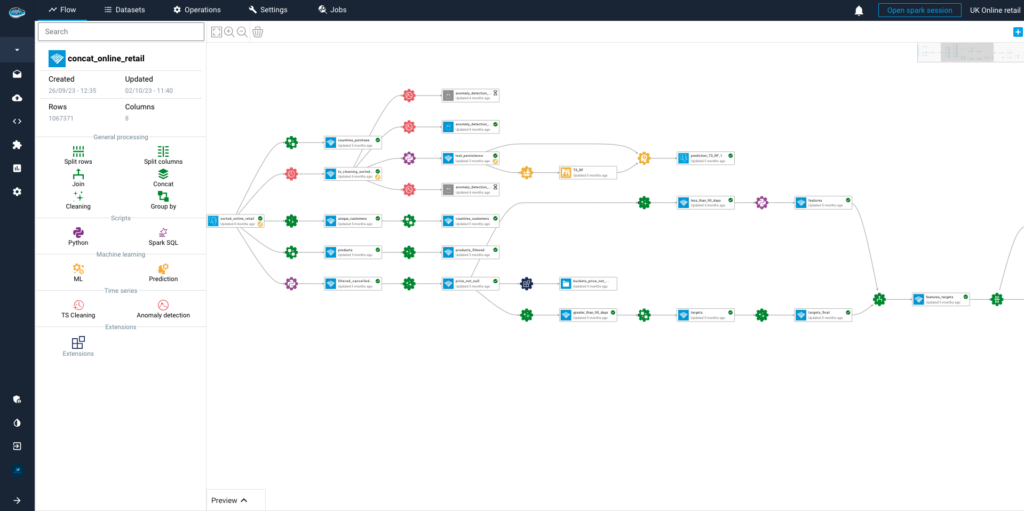

papAI is a flexible and scalable AI platform built to manage the entire machine learning lifecycle, from data preparation to model deployment, while easily fitting into your existing systems.

Designed to help companies industrialize their AI and data science projects, papAI promotes seamless collaboration by bringing teams together on one unified platform. Its user-friendly interface enables groups to work side by side, tackling complex challenges efficiently.

The platform supports a wide range of machine learning methods and offers various options for deploying models. It also includes powerful tools for exploring data, cleaning and preparing datasets, and visualizing results, all aimed at simplifying workflows and accelerating AI-driven outcomes.

Here’s an in-depth look at the key features and advantages of this innovative solution:

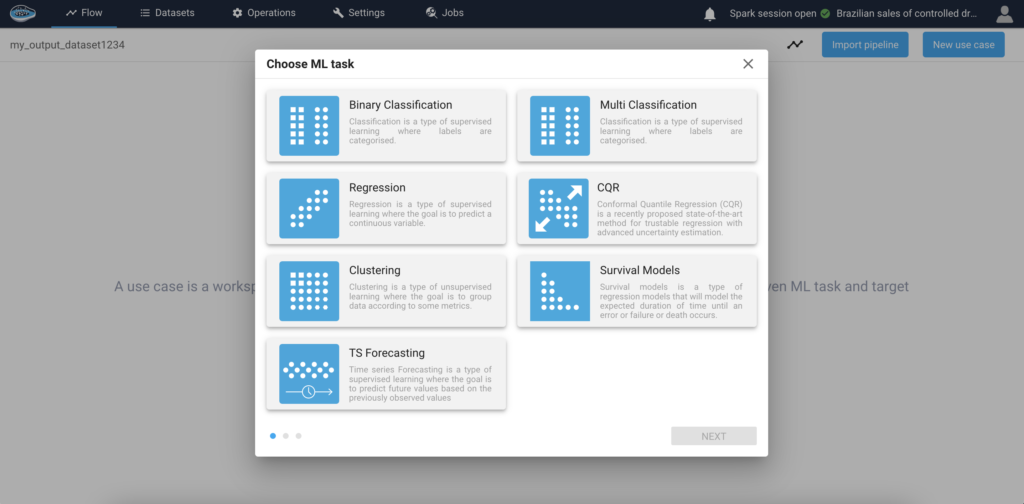

Simplified Model Implementation

papAI Solution greatly simplifies the process of implementing machine learning models through the Model Hub. The Model Hub is papAI’s main source of pre-built, deployment-ready models (including Binary Classification, Regression, Clustering, Time Series Forecasting, etc.). Consequently, companies no longer need to start from scratch when developing models. According to our most recent survey, people who use the papAI solution frequently save 90% of their time when implementing AI concepts.

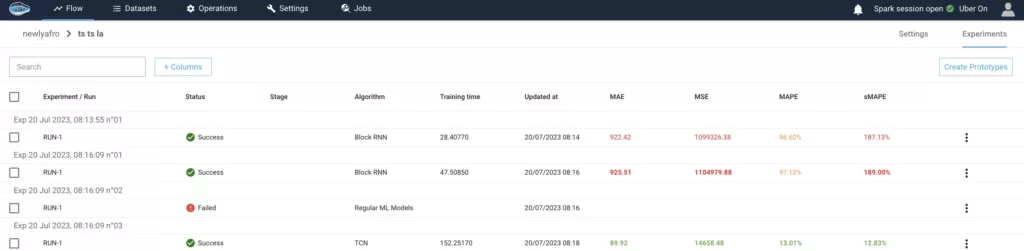

Efficient Model Monitoring & Tracking

With the range of monitoring tools provided by papAI Solution, organisations can keep an eye on the most crucial model performance metrics in real time. Users can track metrics such as accuracy, precision, and other relevant indicators to monitor the model’s performance and spot potential issues or deviations. This continuous monitoring supports high-quality outputs and makes it possible to proactively identify any decline in model performance. Our clients have seen accuracy of up to 98%.

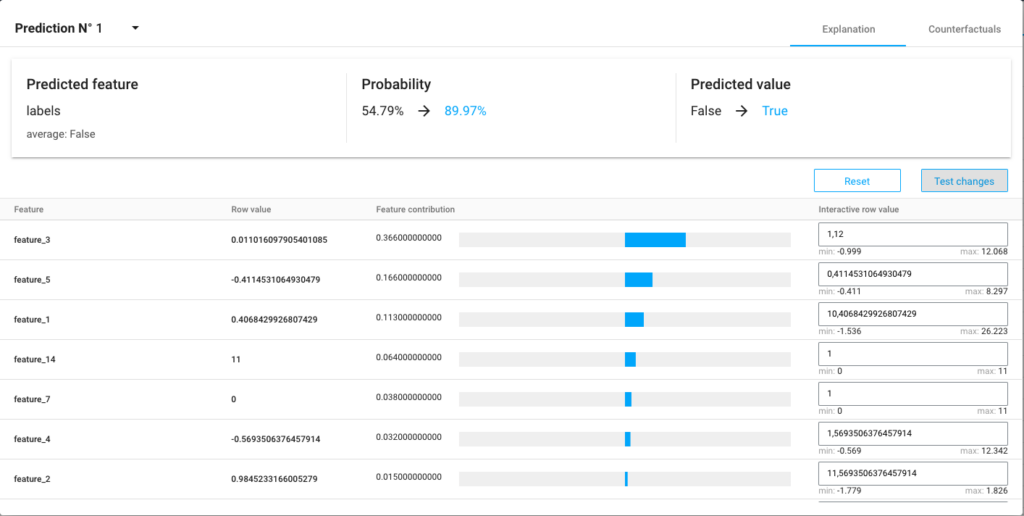

Enhanced Interpretability and Explainability of the Model

In the past, deep learning and advanced machine learning models have been perceived as “black boxes,” making it challenging to understand how they produce predictions. papAI solution uses state-of-the-art model explainability techniques to address this issue directly. It highlights the key features that influence predictions and provides insights into the inner workings of the models, allowing stakeholders to see how these algorithms make judgements.

What Makes papAI Unique?

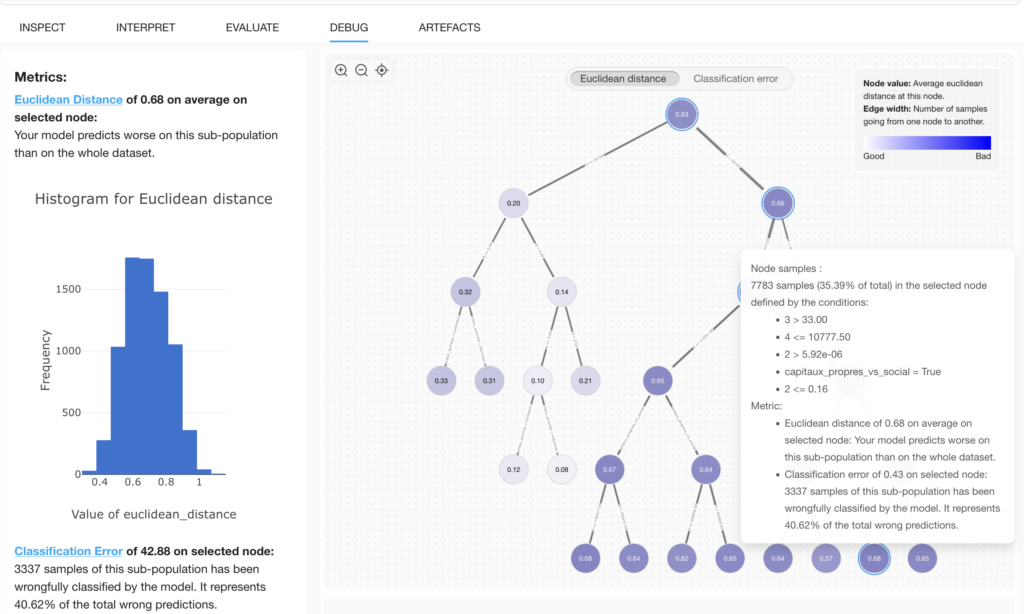

Error Analysis Tree for Fairness and Transparency

Beyond conventional bias identification, papAI’s Error Analysis Tree for Fairness and Transparency is a sophisticated tool that automatically identifies and explains model errors. It breaks down model performance by dividing the data into subgroups and pinpointing regions of high inaccuracy, which helps businesses find hidden problems in a model’s predictions.

This analysis tool ensures that fairness and transparency are prioritized by not only assessing model performance across various groups but also identifying specific instances where the model underperforms, making it easier to address issues systematically.

- Beyond Equity Measures: Traditional bias analysis usually concentrates on equity measures, which assess whether the model treats particular groups unjustly (for example, based on gender, ethnicity, or socioeconomic status). The Error Analysis Tree isn’t limited to equity-related issues. It offers a more comprehensive perspective, looking at performance across a range of data attributes rather than only pre-established protected groups.

- Root Cause Analysis Without Predefined Groups: Unlike bias analysis tools that require users to define protected groups (such as specific demographic or identity groups) beforehand, the Error Analysis Tree autonomously explores the data to discover and highlight the real sources of errors. It offers a deeper, data-driven exploration of where and why the model fails, providing more accurate insights into underlying problems.

- Performance Improvement Focus: While bias analysis is often focused on measuring discrepancies between groups, error analysis is more performance-oriented. The goal of error analysis is not merely to detect whether disparities exist between groups, but to identify specific areas where the model is weak or inaccurate. This facilitates targeted improvements to the model, making it more effective and fair across all subgroups.
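The general idea of slicing errors by subgroup can be illustrated with a deliberately simplified, one-level sketch. This is not papAI’s implementation: a real error analysis tree would split recursively and choose splits automatically, and the record fields and sample data here are invented for the example.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

Record = Dict[str, object]
Slice = Tuple[str, object, float, int]  # (feature, value, error_rate, size)


def error_rates_by_slice(records: List[Record],
                         features: Sequence[str],
                         label_key: str = "label",
                         pred_key: str = "prediction",
                         min_size: int = 2) -> List[Slice]:
    """Return one entry per (feature, value) slice, worst error rate first."""
    counts = defaultdict(lambda: [0, 0])  # (feature, value) -> [errors, total]
    for record in records:
        wrong = record[label_key] != record[pred_key]
        for feature in features:
            bucket = counts[(feature, record[feature])]
            bucket[0] += int(wrong)
            bucket[1] += 1
    slices = [
        (feature, value, errors / total, total)
        for (feature, value), (errors, total) in counts.items()
        if total >= min_size  # ignore slices too small to be meaningful
    ]
    # Highest error rate first; larger slices first among ties.
    return sorted(slices, key=lambda s: (-s[2], -s[3]))


# Invented sample: no protected groups are predefined, only raw attributes.
records = [
    {"channel": "web", "region": "north", "label": 1, "prediction": 1},
    {"channel": "web", "region": "south", "label": 0, "prediction": 0},
    {"channel": "web", "region": "north", "label": 1, "prediction": 1},
    {"channel": "phone", "region": "south", "label": 1, "prediction": 0},
    {"channel": "phone", "region": "north", "label": 0, "prediction": 1},
    {"channel": "phone", "region": "south", "label": 0, "prediction": 0},
]
ranked = error_rates_by_slice(records, ["channel", "region"])
worst = ranked[0]
```

In this sample the errors concentrate in the `phone` channel rather than in either region, which is the kind of finding that would not surface if the analysis only compared predefined groups.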

The New AI Moat Formula 2026: Speed × Adaptation × Reliability

The new AI moat that emerges in 2026 is formed by a company’s capacity to move quickly without breaking things, rather than by model size or unique data. Since the winners are now those that can deploy, test, and iterate AI systems in real time, speed has emerged as the first multiplier in this formula. Organizations that shorten the time between concept and execution have a compounding advantage in a world where innovations appear every few weeks. Speed is now a strategic tool that establishes who leads and who follows, rather than merely an operational metric.

In a market where AI models have become commoditized, the performance gap between leading tools has narrowed to a negligible margin. Consequently, competitive advantage is no longer found in the model itself, but in the speed at which it is integrated into a live environment.

Beyond just approving budgets, the CEO must redefine the organization’s risk appetite, shifting the culture from “cautious scaling” to “rapid experimentation.” By declaring AI a survival mandate and providing “political cover” for teams to bypass legacy bureaucratic hurdles, the CEO ensures that speed becomes a core cultural value rather than just a technical goal.

Despite their strength, big models frequently have severe latency and high computation costs. SLMs can be deployed near the “edge” (closer to the end-user) because they are tailored for specific tasks and use far less power. For real-time applications, where every millisecond of latency affects user experience and operational efficiency, this sharply reduces the time between a business request and an AI-generated answer.

Strategy-as-Code is the process of applying AI to directly integrate high-level business objectives into the technological deployment pipeline. By automating the conversion of executive intent into machine-readable requirements, businesses can avoid “human-in-the-loop” delays and “semantic drift” between technical execution and leadership vision. This helps keep all deployed agents aligned with the company’s North Star metrics right from the start.
